Building a Formula 1 Streaming Data Pipeline With Kafka and RisingWave

KDnuggets

SEPTEMBER 5, 2023

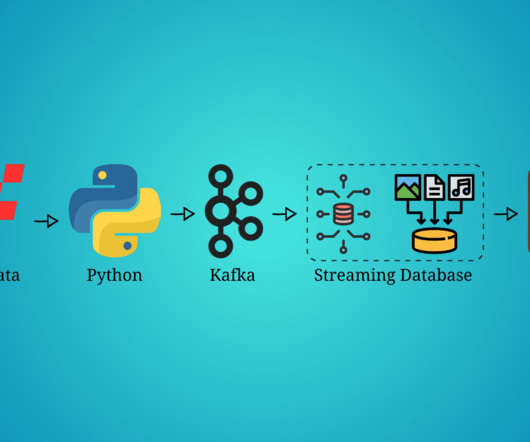

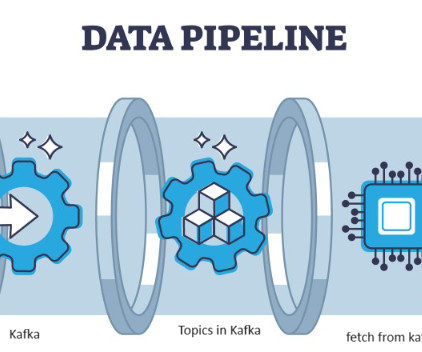

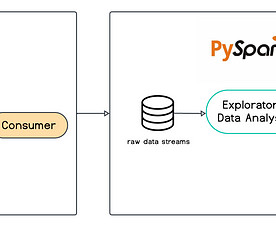

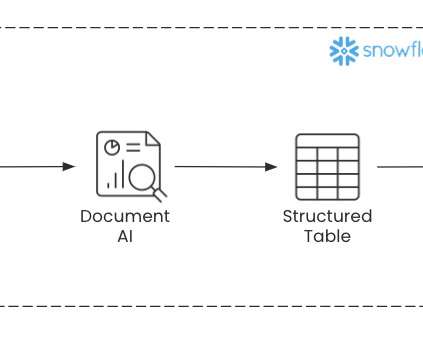

Build a streaming data pipeline from Formula 1 data using Python, Kafka, and RisingWave as the streaming database, then visualize the real-time data in Grafana.
