The 40-hour LLM application roadmap: Learn to build your own LLM applications from scratch

Data Science Dojo

AUGUST 9, 2023

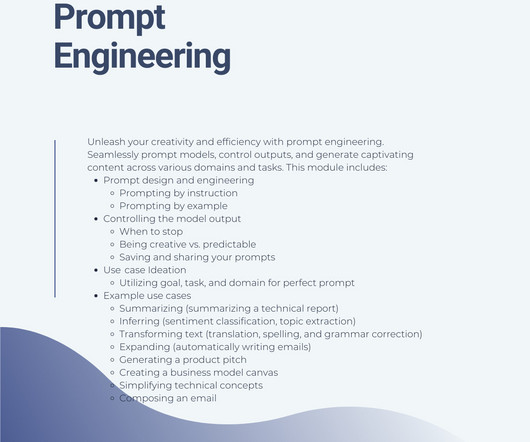

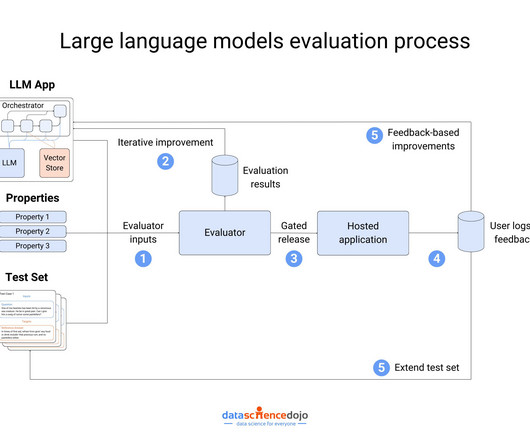

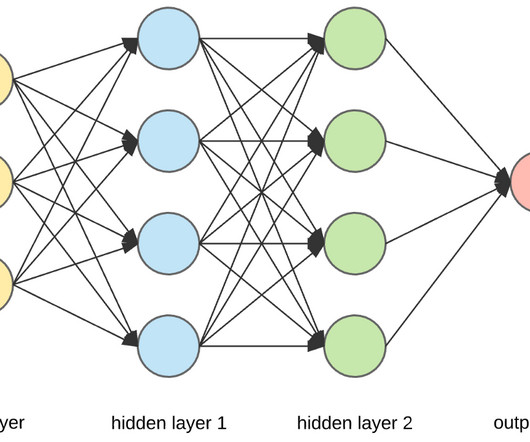

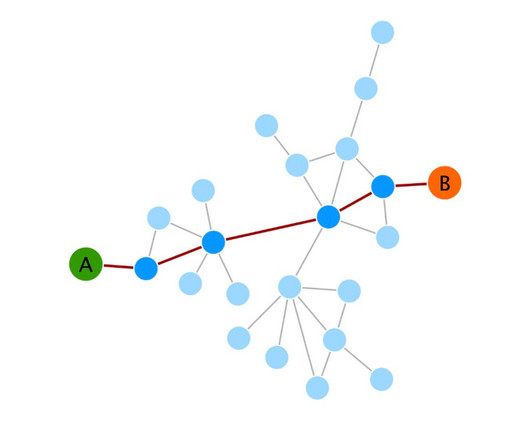

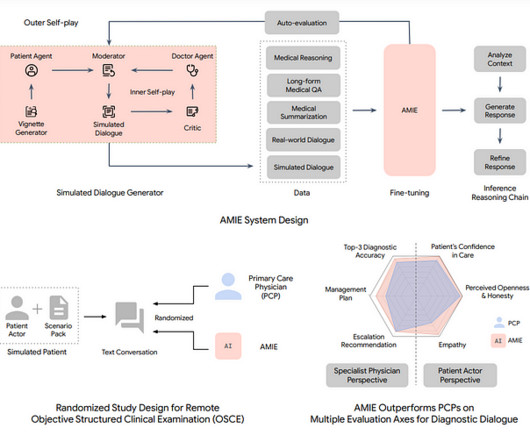

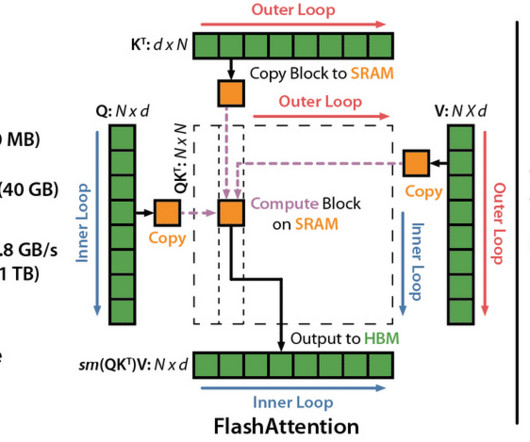

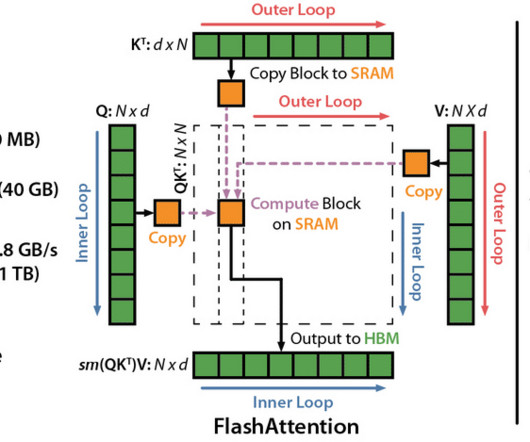

So, whether you’re a student, a software engineer, or a business leader, we encourage you to read on!

Emerging architectures for LLM applications: Several architectures are emerging for LLM applications, including Transformer-based models, graph neural networks, and Bayesian models.
