Building a Machine Learning Feature Platform with Snowflake, dbt, & Airflow

phData

OCTOBER 27, 2023

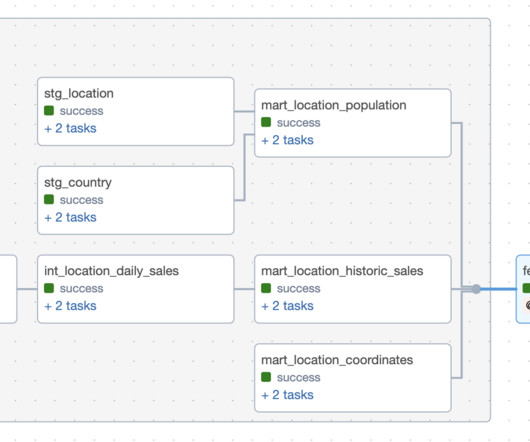

Creating the Feature Store

This demo uses Feast as the feature store, Snowflake as the offline store, and Redis as the online store. Setting up a feature store is only a few steps.

Constructing Feature Engineering Pipelines

After setup, your feature store knows where to pull features, but how do you go about updating your features?
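To make the Feast, Snowflake, and Redis layout mentioned above more concrete, here is a minimal sketch of what the store configuration might look like. It is not taken from the demo itself: the project name, registry path, environment variable names, and placeholder values are all assumptions for illustration. The code builds a dictionary shaped like a Feast `feature_store.yaml` and renders it as YAML text using only the standard library.

```python
import json
import os


def build_feast_config(project: str = "feature_platform_demo") -> dict:
    """Return a dict shaped like a Feast feature_store.yaml.

    The project name, registry path, and environment variable names are
    hypothetical; replace them with the values for your own accounts.
    """
    return {
        "project": project,
        "registry": "data/registry.db",  # assumed local registry file
        "provider": "local",
        # Snowflake holds historical feature values (offline store).
        "offline_store": {
            "type": "snowflake.offline",
            "account": os.environ.get("SNOWFLAKE_ACCOUNT", "<account>"),
            "user": os.environ.get("SNOWFLAKE_USER", "<user>"),
            "password": os.environ.get("SNOWFLAKE_PASSWORD", "<password>"),
            "role": os.environ.get("SNOWFLAKE_ROLE", "<role>"),
            "warehouse": os.environ.get("SNOWFLAKE_WAREHOUSE", "<warehouse>"),
            "database": os.environ.get("SNOWFLAKE_DATABASE", "<database>"),
        },
        # Redis serves the latest feature values at low latency (online store).
        "online_store": {
            "type": "redis",
            "connection_string": os.environ.get("REDIS_CONNECTION", "localhost:6379"),
        },
    }


def to_yaml(config: dict, indent: int = 0) -> str:
    """Render a nested dict of strings as YAML.

    Values are written with json.dumps, because a JSON string is also a
    valid double-quoted YAML scalar. Only dicts and strings are handled.
    """
    pad = " " * indent
    lines = []
    for key, value in config.items():
        if isinstance(value, dict):
            lines.append(f"{pad}{key}:")
            lines.append(to_yaml(value, indent + 2))
        else:
            lines.append(f"{pad}{key}: {json.dumps(value)}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Print the YAML so it can be reviewed or redirected to feature_store.yaml.
    print(to_yaml(build_feast_config()))
```

Reading credentials from environment variables keeps secrets out of the repository. The rendered output would be saved as `feature_store.yaml` in the Feast repository before registering feature definitions.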
