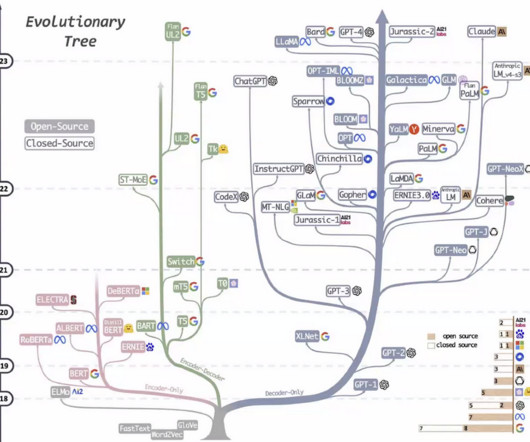

Introducing Llama 2: Six methods to access the open-source large language model

Data Science Dojo

OCTOBER 25, 2023

In this blog, we will get started with Llama 2, Meta's open-source large language model, and walk through six different ways to access it. By the end, you will be well equipped to put this powerful model to work in your own projects.
