Improve multi-hop reasoning in LLMs by learning from rich human feedback

AWS Machine Learning Blog

APRIL 27, 2023

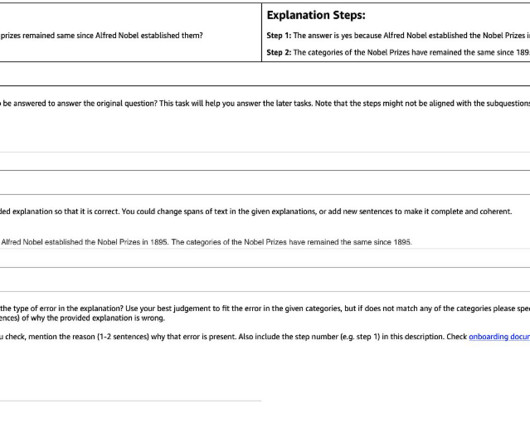

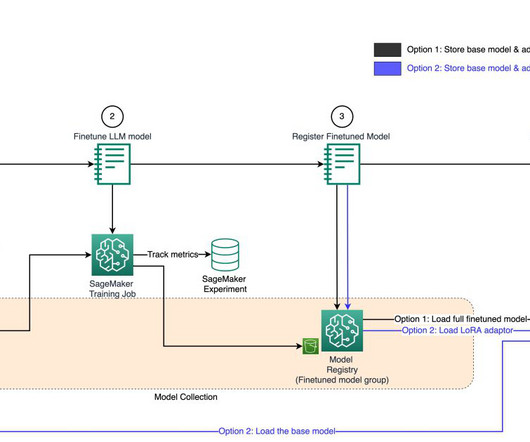

Recent large language models (LLMs) have enabled tremendous progress in natural language understanding. Rather than asking humans to write reasoning chains from scratch, we use the prompting abilities of LLMs to generate reasoning chains and then learn from rich human feedback on them.
