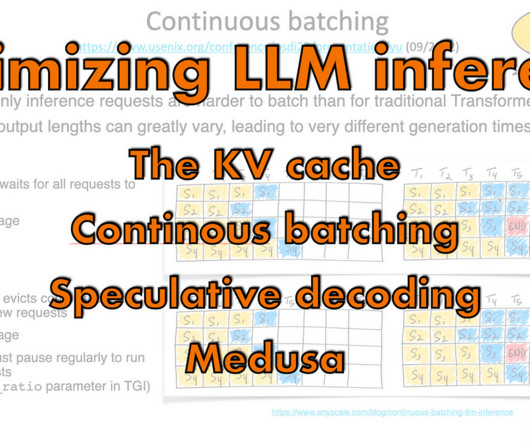

KV-Runahead: Scalable Causal LLM Inference by Parallel Key-Value Cache Generation

Machine Learning Research at Apple

MAY 13, 2024

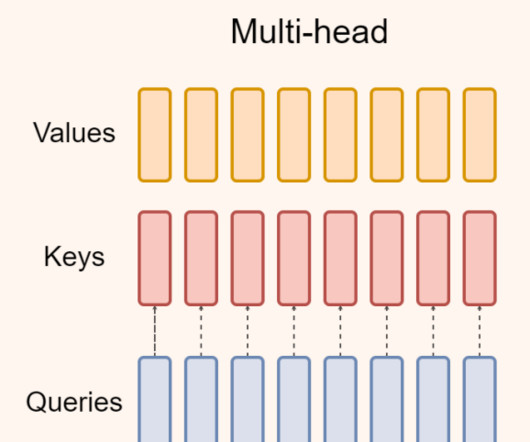

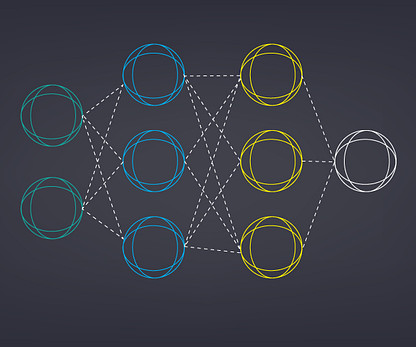

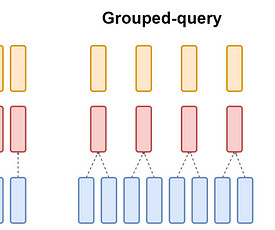

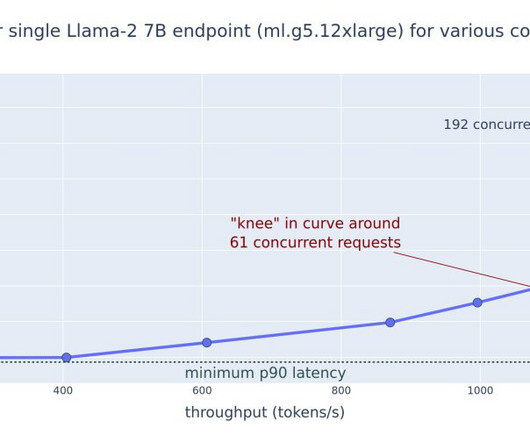

In this work, we propose an efficient parallelization scheme, KV-Runahead to accelerate the prompt phase. The key observation is that the extension phase generates tokens faster than the prompt phase because of key-value cache (KV-cache).

Let's personalize your content