RAG vs finetuning: Which is the best tool for optimized LLM performance?

Data Science Dojo

MARCH 20, 2024

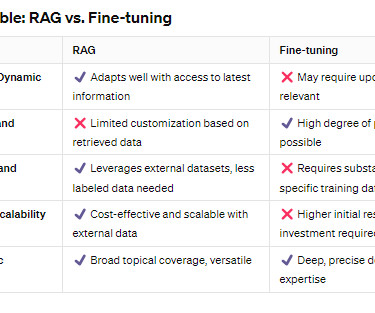

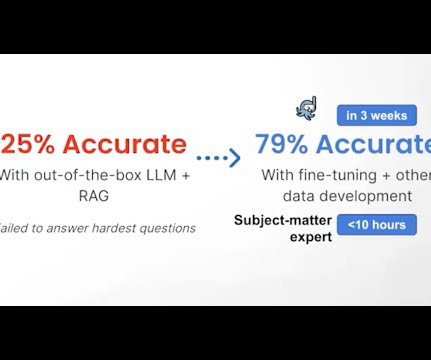

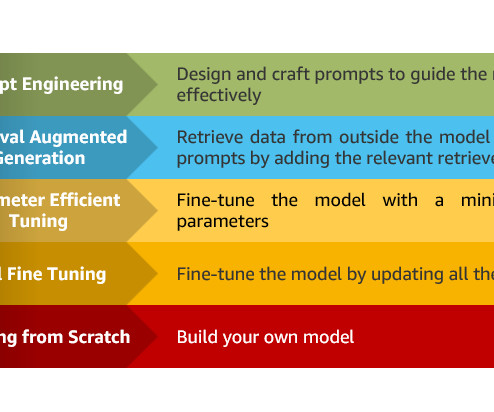

This is the second blog in our series on RAG and finetuning, and it offers a detailed comparison of the two approaches. Let’s work through the RAG vs finetuning debate to determine which tool is best for optimizing LLM performance.
