Embedding techniques: A way to empower language models

Data Science Dojo

FEBRUARY 8, 2024

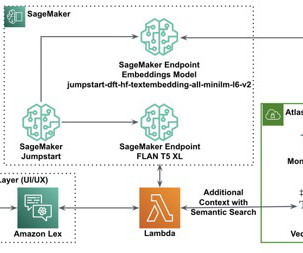

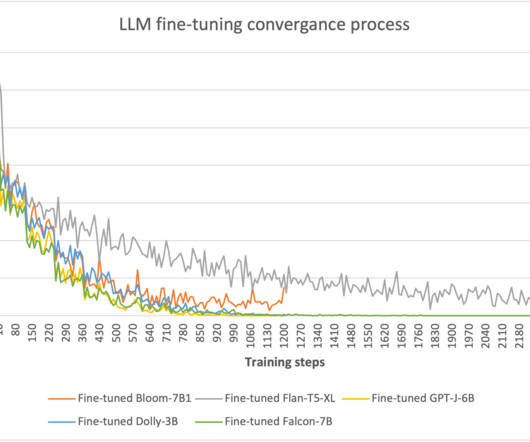

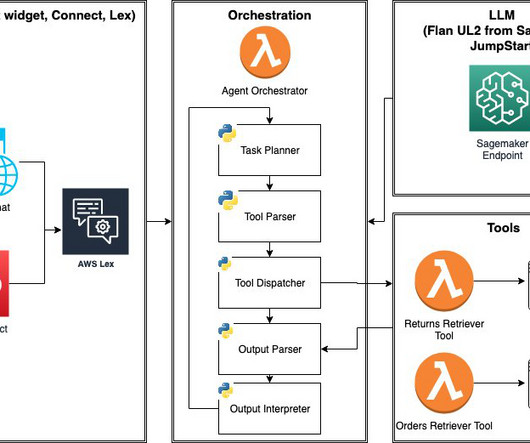

NLP techniques operate on text, but machine learning models are designed to process numerical inputs, so raw text cannot be fed into them directly. This raised a fundamental question: how can we convert text into a format these models can work with? And how are enterprises using embeddings in their LLM processes?
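To make the first question concrete, here is a minimal sketch of one of the simplest answers: a bag-of-words count vector. It is shown only as an illustration of "text in, numbers out" and is not a learned embedding. It assumes naive lowercase whitespace tokenization, and the helper names `build_vocabulary` and `bag_of_words` are hypothetical.

```python
from collections import Counter


def build_vocabulary(documents):
    """Map each distinct lowercase token to a column index, in order of first appearance."""
    vocab = {}
    for doc in documents:
        for token in doc.lower().split():  # assumption: whitespace tokenization
            if token not in vocab:
                vocab[token] = len(vocab)
    return vocab


def bag_of_words(doc, vocab):
    """Return a count vector: position i holds how often vocabulary word i occurs in doc."""
    counts = Counter(doc.lower().split())
    vector = [0] * len(vocab)
    for token, count in counts.items():
        if token in vocab:  # words not seen when building the vocabulary are ignored
            vector[vocab[token]] = count
    return vector


docs = ["the cat sat", "the dog sat on the mat"]
vocab = build_vocabulary(docs)
print(vocab)  # {'the': 0, 'cat': 1, 'sat': 2, 'dog': 3, 'on': 4, 'mat': 5}
print([bag_of_words(d, vocab) for d in docs])  # [[1, 1, 1, 0, 0, 0], [2, 0, 1, 1, 1, 1]]
```

Each sentence becomes a fixed-length list of numbers that a model can consume. These vectors are sparse and capture no notion of meaning: "cat" and "dog" are as unrelated as "cat" and "mat". Dense embeddings are designed to address that limitation.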
