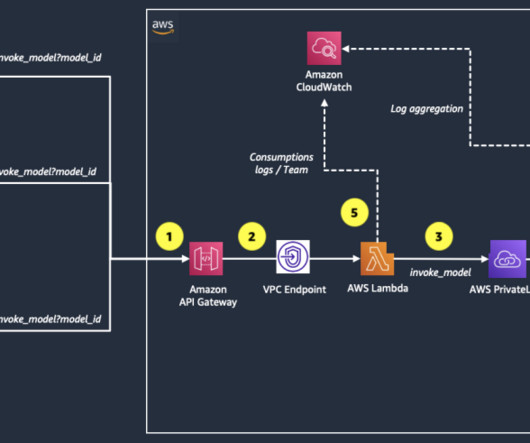

Build an internal SaaS service with cost and usage tracking for foundation models on Amazon Bedrock

AWS Machine Learning Blog

FEBRUARY 9, 2024

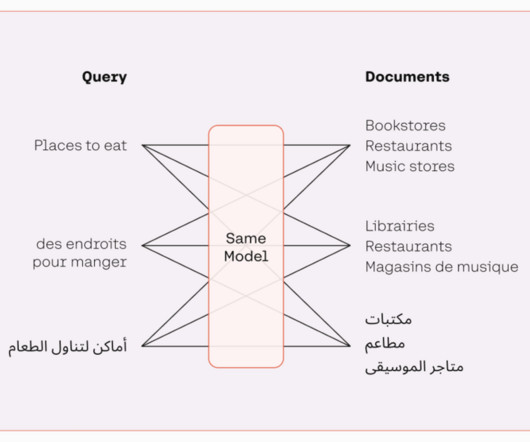

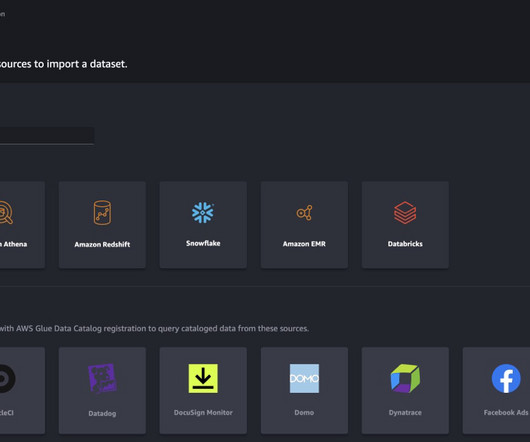

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon via a single API, along with a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI.

Let's personalize your content