Simplify continuous learning of Amazon Comprehend custom models using Comprehend flywheel

AWS Machine Learning Blog

MARCH 1, 2023

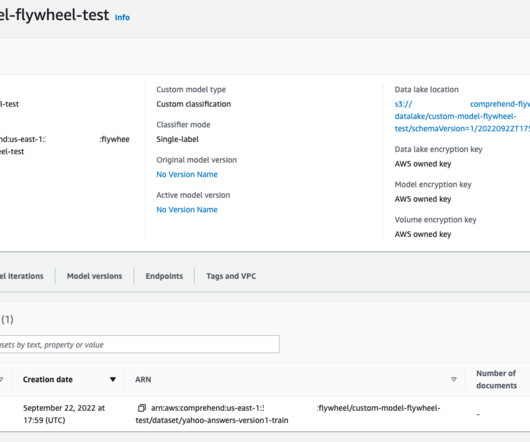

For the data preparation script and details on the dataset's structure, refer to section 4, “Preparing data,” of the post *Building a custom classifier using Amazon Comprehend*. To copy the prepared dataset into your current working directory, run:

`aws s3 cp s3://aws-blogs-artifacts-public/artifacts/ML-13607/custom-classifier-complete-dataset.csv .`
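Before you train a classifier, you might sanity-check the downloaded file. The following Python sketch assumes the CSV uses Amazon Comprehend's multi-class training layout: no header row, the label in the first column, and the document text in the second. Confirm this against the data preparation section referenced above before relying on it. The function name `summarize_multiclass_csv` is hypothetical. It counts documents per label and raises an error on malformed rows.

```python
import csv
from collections import Counter
from typing import Iterable


def summarize_multiclass_csv(lines: Iterable[str]) -> Counter:
    """Count documents per label in a multi-class training CSV.

    Assumption: no header row, label in column 1, document text in column 2
    (the layout Amazon Comprehend expects for multi-class mode).
    """
    counts: Counter = Counter()
    # csv.reader handles quoted fields, so document text may contain commas.
    for row_number, row in enumerate(csv.reader(lines), start=1):
        if not row:
            continue  # csv.reader yields [] for blank lines; skip them
        if len(row) != 2:
            raise ValueError(
                f"row {row_number}: expected 2 columns, got {len(row)}"
            )
        label, text = row[0].strip(), row[1].strip()
        if not label or not text:
            raise ValueError(f"row {row_number}: empty label or document")
        counts[label] += 1
    return counts
```

To use it, open `custom-classifier-complete-dataset.csv` with `newline=""` and `encoding="utf-8"`, then pass the file object to the function. The returned `Counter` also shows at a glance whether some labels have far fewer examples than others.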
