Streamline diarization using AI as an assistive technology: ZOO Digital’s story

AWS Machine Learning Blog

FEBRUARY 20, 2024

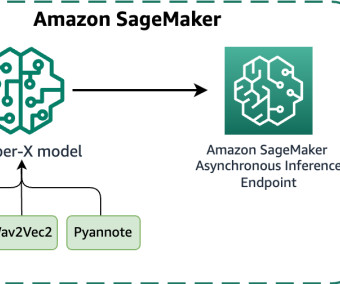

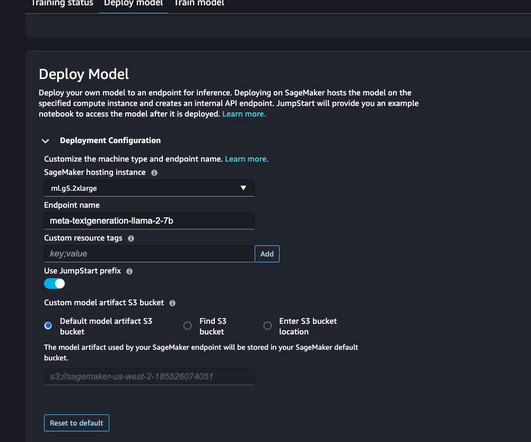

Trusted by the biggest names in entertainment, ZOO Digital delivers high-quality localization and media services at scale, including dubbing, subtitling, scripting, and compliance. In the following sections, we detail how the WhisperX model is deployed on Amazon SageMaker and evaluate its diarization performance.
