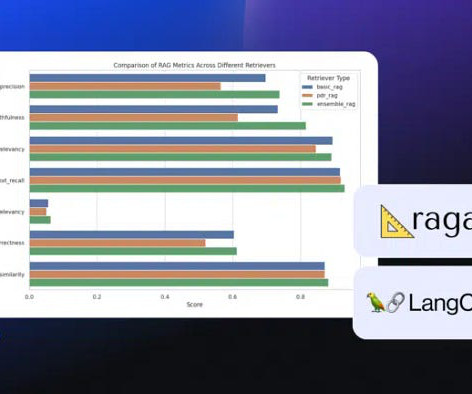

Evaluating RAG Metrics Across Different Retrieval Methods

Towards AI

FEBRUARY 3, 2024

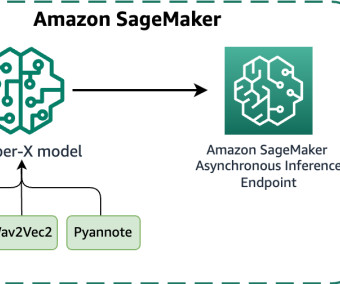

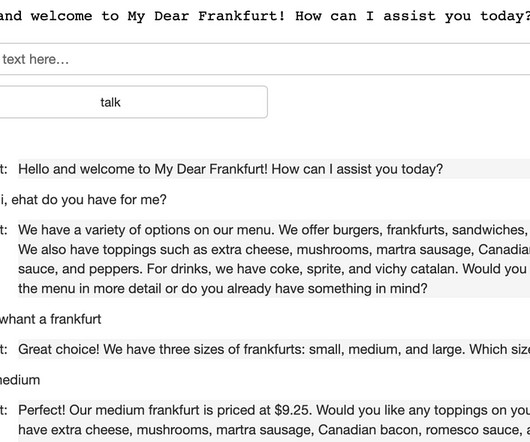

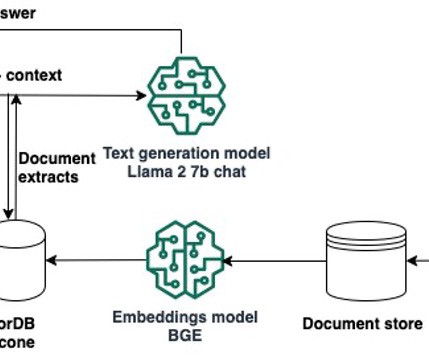

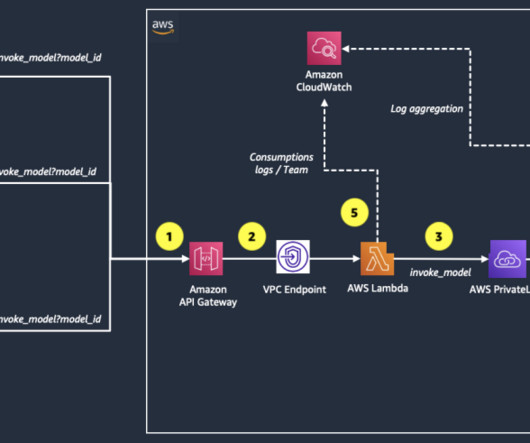

By Author

In this post, you’ll learn about creating synthetic data, evaluating RAG pipelines using the Ragas tool, and understanding how various retrieval methods shape your RAG evaluation metrics.

🛠️ Utilizing the Ragas Tool: Learn how to use Ragas to comprehensively assess RAG model performance across various metrics.
