Streamline diarization using AI as an assistive technology: ZOO Digital’s story

AWS Machine Learning Blog

FEBRUARY 20, 2024

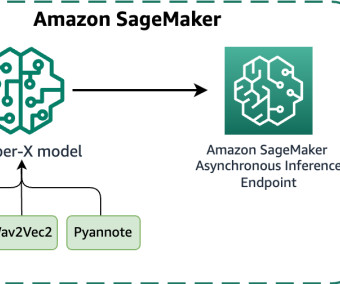

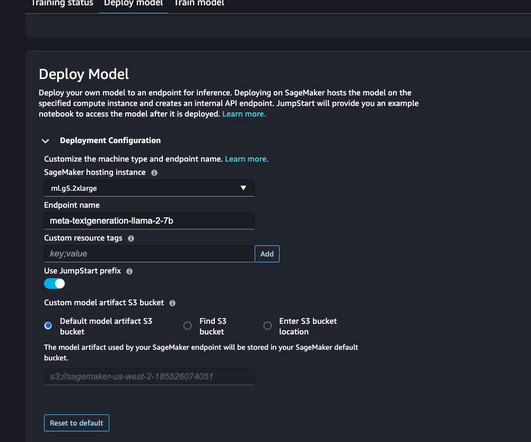

SageMaker asynchronous endpoints support upload sizes up to 1 GB and incorporate auto scaling features that efficiently mitigate traffic spikes and save costs during off-peak times. At the time of writing, using the faster-whisper Large V2 model, the resulting tarball representing the SageMaker model is 3 GB in size.

Let's personalize your content