LlamaSherpa: Revolutionizing Document Chunking for LLMs

Heartbeat

DECEMBER 7, 2023

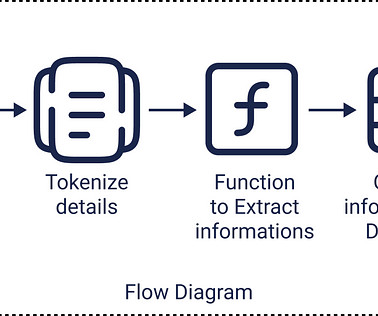

Result: The `doc` variable now holds a `Document` object that contains the structured data parsed from the PDF.

`type(doc)  # llmsherpa.readers.layout_reader.Document`

Retrieving Chunks from the PDF

The `chunks` method returns coherent pieces, or segments, of content from the parsed PDF.

Let’s get some preliminaries out of the way: `%%capture !pip`
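The following is a minimal, self-contained sketch of the idea behind layout-aware chunking. It does not call the llmsherpa parsing service. It assumes the PDF has already been reduced to a flat list of blocks, each tagged as a header (with a depth) or a paragraph. The names `Block`, `Chunk`, `ParsedDoc`, and `context_text` are hypothetical and are not llmsherpa's classes or methods, and this is not how the library is implemented. The sketch only shows why keeping each chunk's heading trail makes it easier for an LLM to read out of context.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Block:
    """A parsed layout element. Hypothetical shape, not llmsherpa's classes."""
    kind: str       # "header" or "para"
    text: str
    level: int = 1  # heading depth (1 = top level); ignored for paragraphs


@dataclass
class Chunk:
    """One coherent segment plus the headings it sits under."""
    section_path: List[str]
    text: str

    def context_text(self) -> str:
        # Prefix the chunk with its heading trail so it reads well on its own.
        if not self.section_path:
            return self.text
        return " > ".join(self.section_path) + "\n" + self.text


class ParsedDoc:
    """Holds the structured blocks recovered from a PDF (toy stand-in)."""

    def __init__(self, blocks: List[Block]) -> None:
        self.blocks = blocks

    def chunks(self) -> List[Chunk]:
        path: List[str] = []  # current heading trail, outermost first
        out: List[Chunk] = []
        for block in self.blocks:
            if block.kind == "header":
                depth = max(block.level, 1)
                # Drop headings at this depth or deeper, then descend into this one.
                del path[depth - 1:]
                path.append(block.text)
            else:
                # Each non-header block becomes a chunk tagged with its section path.
                out.append(Chunk(section_path=list(path), text=block.text))
        return out


if __name__ == "__main__":
    doc = ParsedDoc([
        Block("header", "Method", 1),
        Block("header", "Parsing", 2),
        Block("para", "Layout is recovered from the PDF."),
    ])
    for chunk in doc.chunks():
        print(chunk.context_text())
```

With llmsherpa itself, the parsed `doc` is iterated through `doc.chunks()`, which yields the library's own chunk objects; the toy above only illustrates why carrying the section structure along with each chunk gives an LLM better context than splitting on a fixed character count.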
