A Complete Guide on Building an ETL Pipeline for Beginners

Analytics Vidhya

JUNE 13, 2022

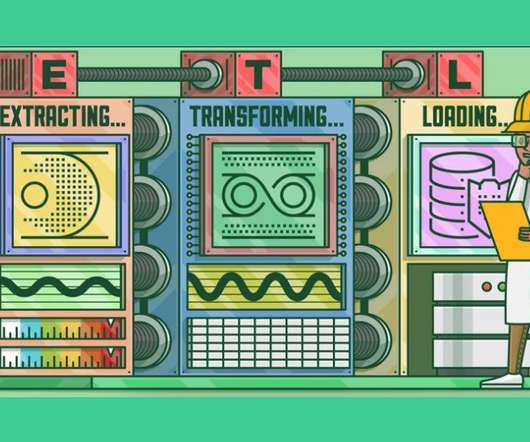

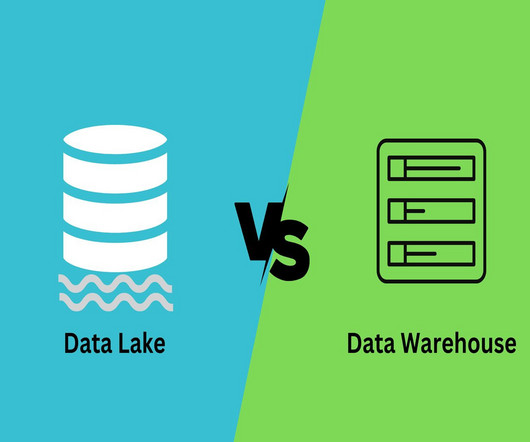

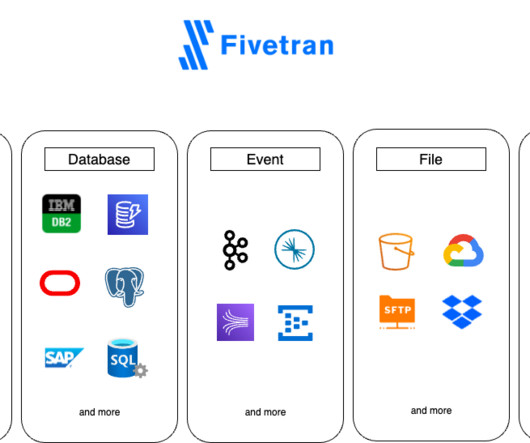

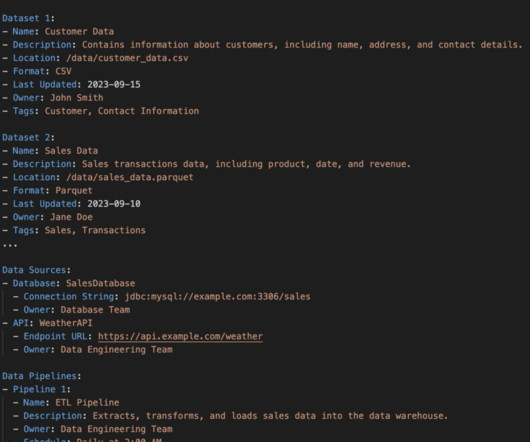

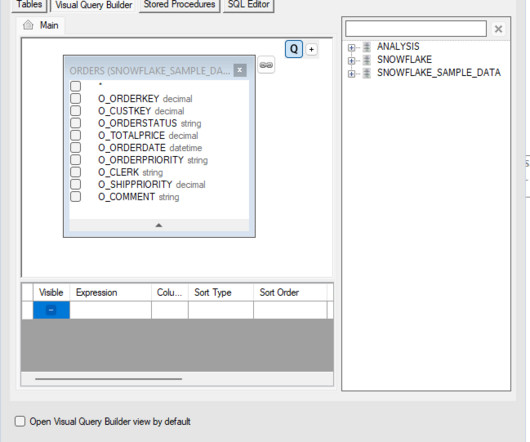

This article was published as part of the Data Science Blogathon.

Introduction to ETL Pipelines

ETL (extract, transform, load) pipelines are sets of processes that move data from one or more sources into a target database, such as a data warehouse.
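To make the three stages concrete, here is a minimal sketch using only the Python standard library. It is an illustration under stated assumptions, not the article's own implementation: the sample CSV data, the `orders` table, and the function names `extract`, `transform`, `load`, and `run_pipeline` are all hypothetical, and an in-memory SQLite database stands in for a real data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source data; a real pipeline would read from files, APIs, or databases.
RAW_CSV = """order_id,customer,amount
1, alice ,19.99
2,BOB,5.00
3,carol,
"""


def extract(text):
    """Extract: parse raw CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: trim and lowercase names, cast types, skip rows with no amount."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # drop incomplete records instead of loading bad data
        cleaned.append(
            (int(row["order_id"]), row["customer"].strip().lower(), float(amount))
        )
    return cleaned


def load(records, conn):
    """Load: write the cleaned records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


def run_pipeline(text, conn):
    """Run the three stages in order: extract, then transform, then load."""
    load(transform(extract(text)), conn)


# An in-memory SQLite database is an assumption standing in for a data warehouse.
conn = sqlite3.connect(":memory:")
run_pipeline(RAW_CSV, conn)
```

Keeping each stage in its own function means a source or target can be swapped without touching the other stages, which is one common way to structure small pipelines.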
