What exactly is Data Profiling: Its Examples & Types

Pickl AI

AUGUST 31, 2023

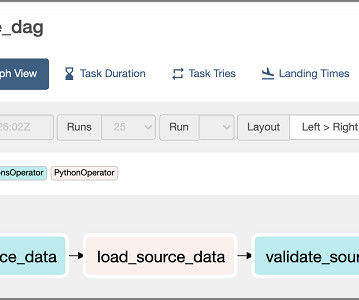

Data profiling is an important step in ETL for ensuring that data quality meets business requirements. This blog explains what data profiling is, what its benefits are, and which tools are commonly used for it.
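For a rough idea of what profiling computes, the sketch below summarizes each column of a small in-memory dataset: how many rows it has, how many values are missing, how many values are distinct, and the minimum and maximum for numeric columns. The function name `profile_columns` and this particular set of statistics are illustrative assumptions, not the API of any specific profiling tool. Real tools typically report many more metrics.

```python
from typing import Any


def profile_columns(rows: list[dict[str, Any]]) -> dict[str, dict[str, Any]]:
    """Hypothetical helper: basic per-column statistics for a list of records."""
    # Collect every column name that appears in any row.
    columns = {key for row in rows for key in row}
    profile: dict[str, dict[str, Any]] = {}
    for col in sorted(columns):
        # A missing key is treated the same as an explicit None (a null).
        values = [row.get(col) for row in rows]
        present = [v for v in values if v is not None]
        stats: dict[str, Any] = {
            "count": len(values),
            "nulls": len(values) - len(present),
            "distinct": len(set(present)),
        }
        # Report a range only when every non-null value is numeric (bools excluded).
        numeric = [
            v for v in present
            if isinstance(v, (int, float)) and not isinstance(v, bool)
        ]
        if numeric and len(numeric) == len(present):
            stats["min"] = min(numeric)
            stats["max"] = max(numeric)
        profile[col] = stats
    return profile


if __name__ == "__main__":
    sample = [
        {"id": 1, "email": "a@x.com", "age": 34},
        {"id": 2, "email": None, "age": 29},
        {"id": 3, "email": "a@x.com", "age": None},
    ]
    for name, stats in profile_columns(sample).items():
        print(name, stats)
```

On this sample, the profile shows that `email` has one null and only one distinct value, which is the kind of signal (missing or duplicated data) that profiling is meant to surface before data moves further through an ETL pipeline.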
