ETL Pipeline using Shell Scripting | Data Pipeline

Analytics Vidhya

JANUARY 5, 2022

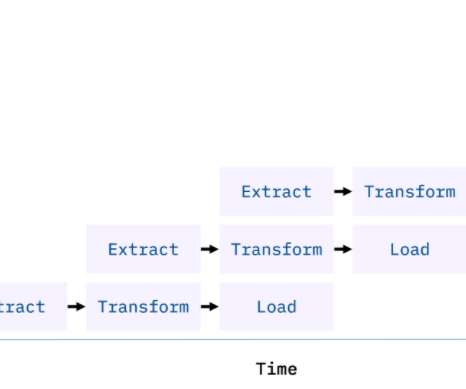

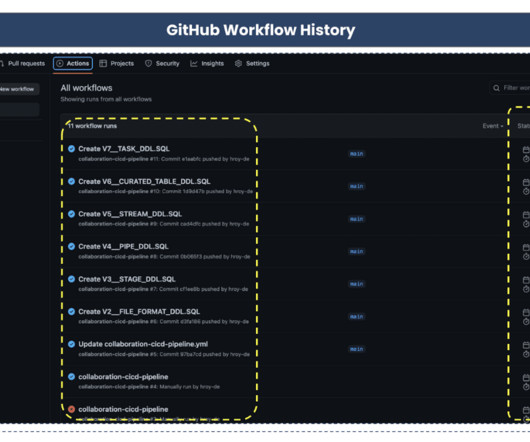

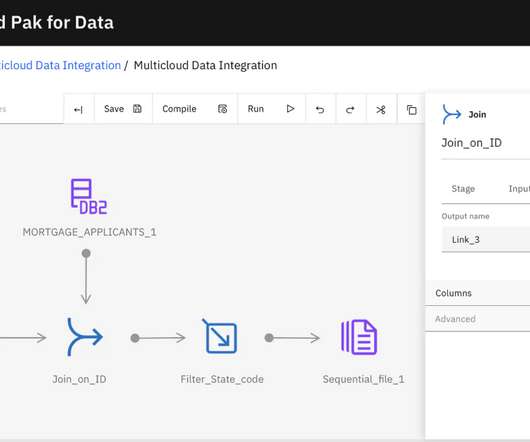

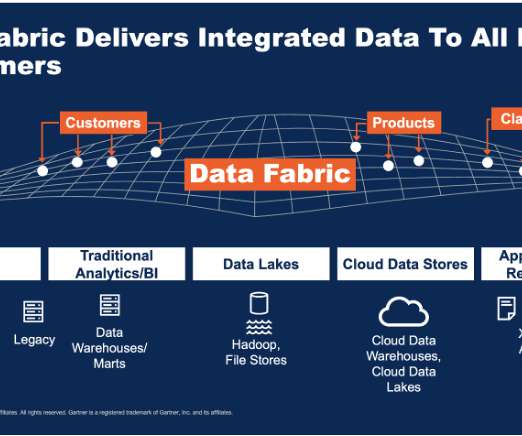

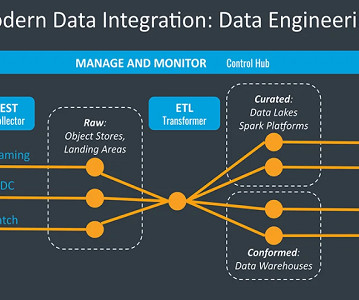

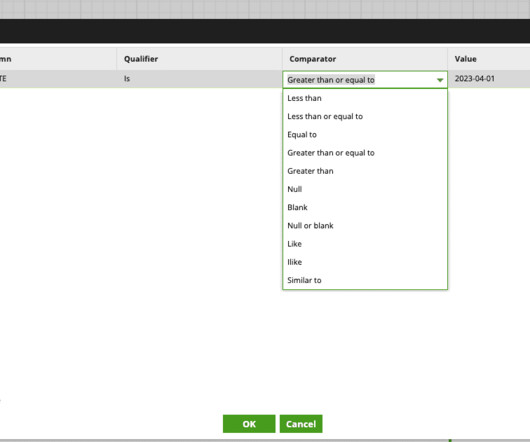

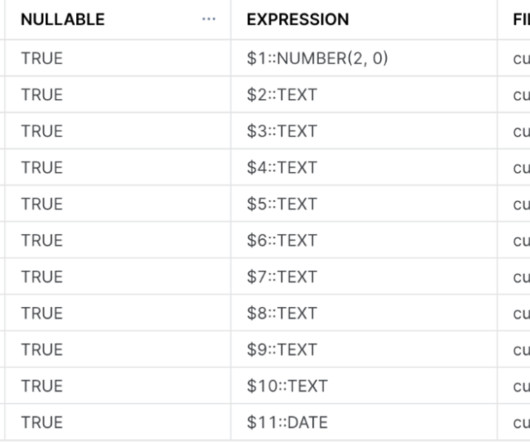

Introduction

ETL (extract, transform, load) pipelines can be built from Bash scripts. You will learn how shell scripting can implement an ETL pipeline and how ETL scripts or tasks can be scheduled using shell scripting. The post ETL Pipeline using Shell Scripting | Data Pipeline appeared first on Analytics Vidhya.
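To make the idea concrete, here is a minimal sketch of what such a pipeline might look like in Bash. It is not taken from the original article: the file names, the three-column CSV layout, the inline sample data, and the cron schedule in the comments are all assumptions chosen for illustration. A real extract step would more likely pull data with a tool such as `curl` or `scp`.

```shell
#!/usr/bin/env bash
# Minimal ETL sketch. All paths, the CSV layout, and the sample rows are
# hypothetical; they only illustrate the extract/transform/load stages.
set -euo pipefail

workdir="$(mktemp -d)"            # scratch directory for this run
raw="$workdir/raw.csv"            # output of the extract stage
clean="$workdir/clean.csv"        # output of the transform stage
target="$workdir/warehouse.csv"   # stand-in for the load destination

# Extract: a here-document stands in for a remote source.
extract() {
  cat > "$raw" <<'EOF'
id,name,amount
1,alice,10.5
2,bob,
3,carol,7
EOF
}

# Transform: skip the header, drop rows with an empty amount,
# and upper-case the name column.
transform() {
  awk -F',' 'NR > 1 && $3 != "" { print $1 "," toupper($2) "," $3 }' \
    "$raw" > "$clean"
}

# Load: append the cleaned rows to the target file.
load() {
  cat "$clean" >> "$target"
}

extract
transform
load
cat "$target"

# Scheduling (assumed paths): a crontab entry like the following would run
# the script every day at 02:00 and append its output to a log file.
#   0 2 * * * /path/to/etl.sh >> /path/to/etl.log 2>&1
```

Keeping each stage in its own function means a failing stage stops the run (because of `set -e`) before anything is loaded, and each stage can be swapped out independently, for example by replacing the here-document with a download.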
