Top 10 Hadoop Interview Questions You Must Know

Analytics Vidhya

FEBRUARY 27, 2023

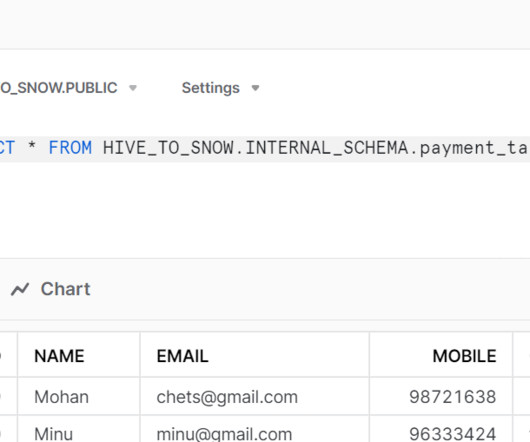

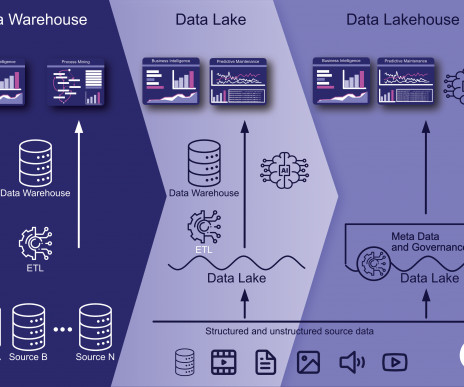

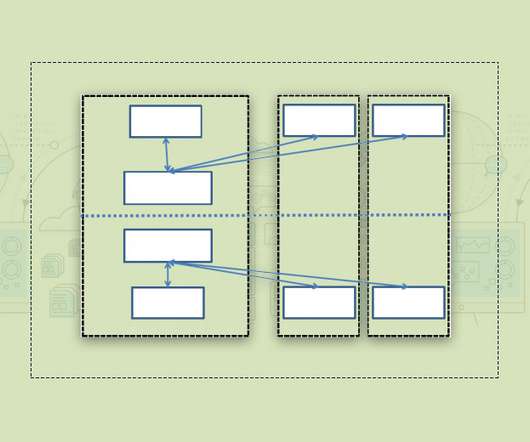

Introduction

The Hadoop Distributed File System (HDFS) is a Java-based file system that is distributed, scalable, and portable. Because it is not fully POSIX-compliant, some consider it a data store rather than a true file system. HDFS and […]

The post Top 10 Hadoop Interview Questions You Must Know appeared first on Analytics Vidhya.