CI/CD for Data Pipelines: A Game-Changer with AnalyticsCreator

Data Science Blog

MAY 20, 2024

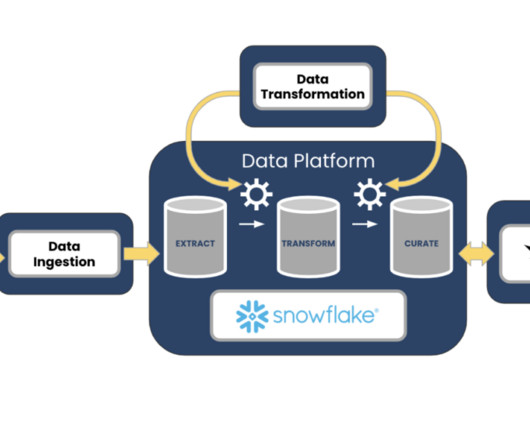

Continuous Integration and Continuous Delivery (CI/CD) for Data Pipelines: It is a Game-Changer with AnalyticsCreator! The need for efficient and reliable data pipelines is paramount in data science and data engineering. They transform data into a consistent format for users to consume.

Let's personalize your content