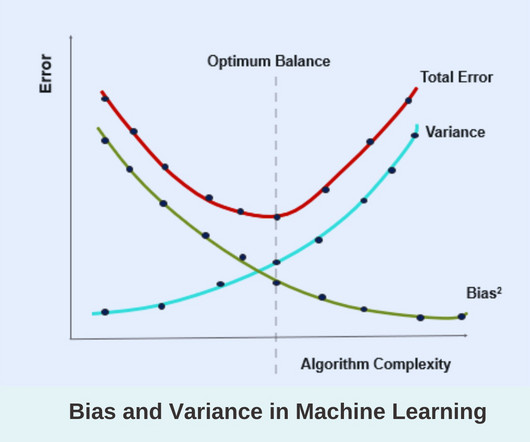

Bias and Variance in Machine Learning

Pickl AI

JULY 26, 2023

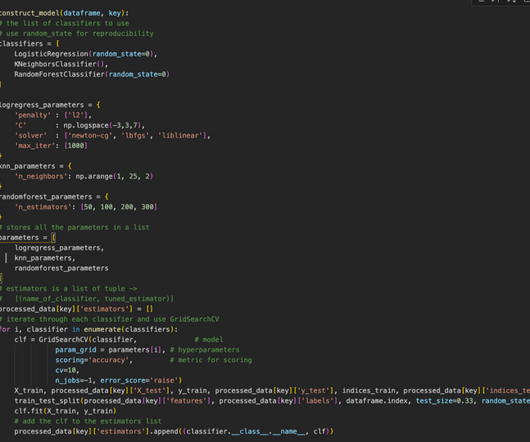

Highly Flexible Neural Networks: Deep neural networks with many layers and parameters can memorize the training data, which results in high variance.

K-Nearest Neighbors with Small k: In the k-nearest neighbors algorithm, choosing a small value of k can lead to high variance, because each prediction depends on only a few training points and is therefore sensitive to noise in them.
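The effect of k on variance can be checked with a small simulation. The sketch below is illustrative only and is not taken from the original article: the target function (`sin`), the noise level, the query point `x0`, and the helper names `knn_predict`, `sample_training_set`, and `prediction_variance` are all assumptions chosen for the example. It redraws a noisy training set many times and measures how much the k-NN prediction at one fixed point changes from one draw to the next.

```python
import math
import random
import statistics


def knn_predict(train, x0, k):
    """Average the targets of the k training points closest to x0."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x0))[:k]
    return sum(y for _, y in nearest) / k


def sample_training_set(rng, n=50, noise_sd=0.3):
    """Draw n noisy observations of sin(x) on [0, 2*pi] (assumed toy data)."""
    xs = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n)]
    return [(x, math.sin(x) + rng.gauss(0.0, noise_sd)) for x in xs]


def prediction_variance(k, x0=1.0, trials=200, seed=0):
    """Variance of the k-NN prediction at x0 across freshly drawn training sets."""
    rng = random.Random(seed)
    preds = [knn_predict(sample_training_set(rng), x0, k) for _ in range(trials)]
    return statistics.pvariance(preds)


var_k1 = prediction_variance(k=1)
var_k15 = prediction_variance(k=15)
print(f"variance of prediction with k=1:  {var_k1:.4f}")
print(f"variance of prediction with k=15: {var_k15:.4f}")
```

With k=1 the prediction simply copies the noise of a single neighbor, so it varies much more across training sets than the k=15 prediction, which averages that noise out. A larger k is not free, though: averaging over a wider neighborhood can increase bias.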
