How a Delta Lake is Processed with Azure Synapse Analytics

Analytics Vidhya

JULY 29, 2022

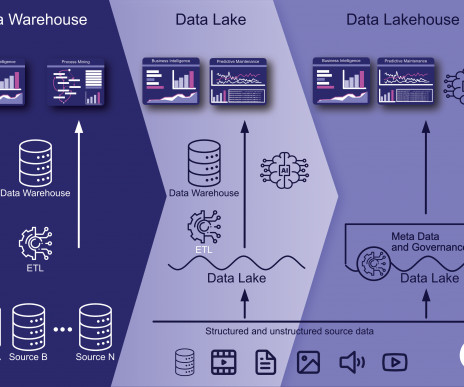

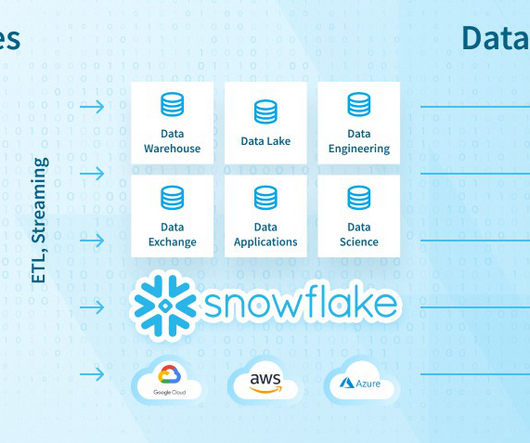

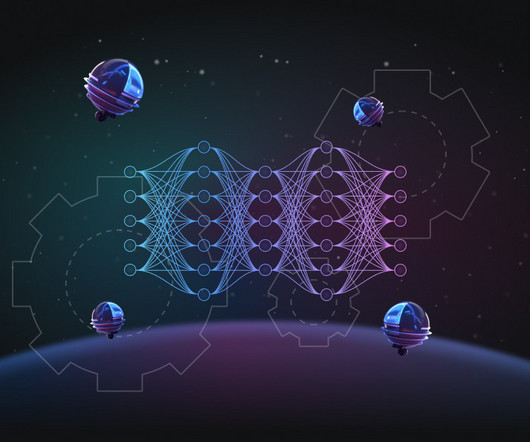

This article was published as a part of the Data Science Blogathon.

Introduction

Most of us are familiar with the common modern cloud data warehouse model, which provides a platform comprising a data lake (based on a cloud storage account such as Azure Data Lake Storage Gen2) and a data warehouse compute engine […].
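In this model, engines such as Spark pools reach data in an Azure Data Lake Storage Gen2 account through an `abfss://` URI. The form is `abfss://<container>@<account>.dfs.core.windows.net/<path>`. The small helper below is a minimal sketch that builds such a URI. The helper name `abfss_uri` and the account, container and path values are hypothetical and only illustrate the format.

```python
def abfss_uri(account: str, container: str, path: str = "") -> str:
    """Build an ABFS (TLS) URI for a location in an ADLS Gen2 account.

    Format: abfss://<container>@<account>.dfs.core.windows.net/<path>
    This helper is a hypothetical illustration, not part of any Azure SDK.
    """
    if not account or not container:
        raise ValueError("account and container are required")
    # Drop leading slashes so the path joins cleanly after the host name.
    clean_path = path.lstrip("/")
    return f"abfss://{container}@{account}.dfs.core.windows.net/{clean_path}"


# Hypothetical storage account, container and folder, for illustration only.
delta_path = abfss_uri("mydatalake", "lake", "/delta/sales")
print(delta_path)  # → abfss://lake@mydatalake.dfs.core.windows.net/delta/sales
```

In a Synapse Spark notebook, a path like this would typically be passed to `spark.read.format("delta").load(delta_path)` to read a Delta table stored in the lake. This assumes the Spark pool has access to the storage account.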