How the right data and AI foundation can empower a successful ESG strategy

IBM Journey to AI blog

APRIL 10, 2023

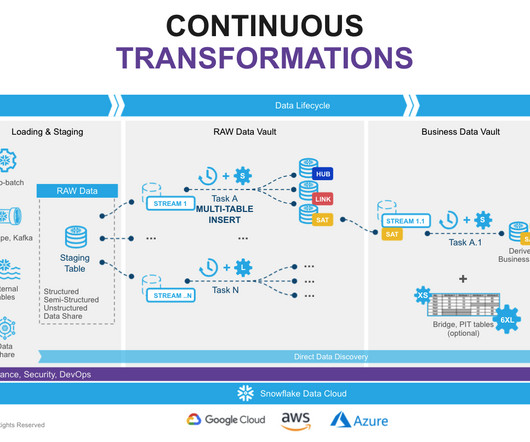

A well-designed data architecture should support business intelligence and analysis, automation, and AI, all of which can help organizations quickly seize market opportunities, build customer value, drive major efficiencies, and respond to risks such as supply chain disruptions.
