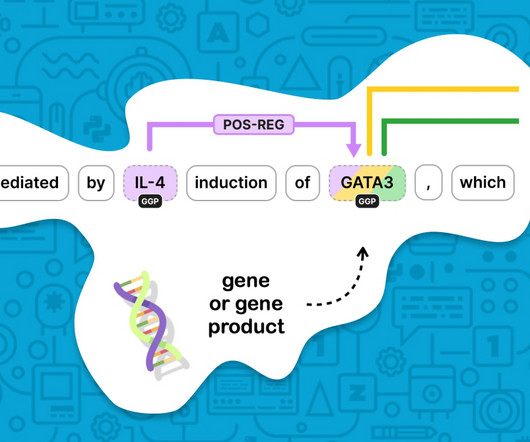

spaCy meets Transformers: Fine-tune BERT, XLNet and GPT-2

Explosion

AUGUST 1, 2019

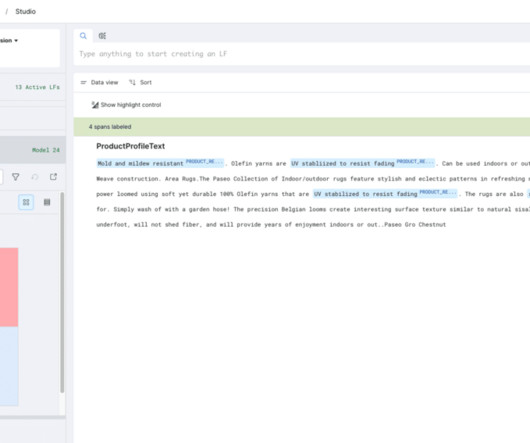

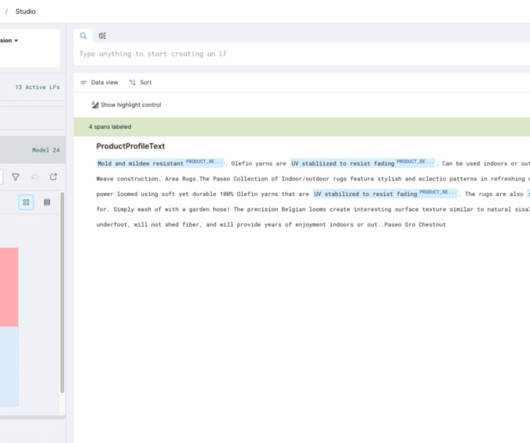

Huge transformer models like BERT, GPT-2 and XLNet have set a new standard for accuracy on almost every NLP leaderboard. You can now use these models in spaCy, via a new interface library we’ve developed that connects spaCy to Hugging Face’s awesome implementations. We have updated our library and this blog post accordingly.
