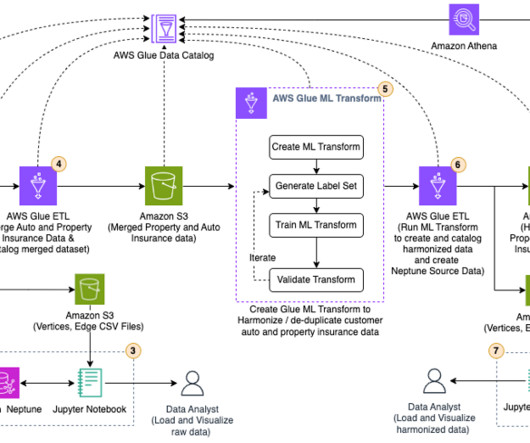

Harmonize data using AWS Glue and AWS Lake Formation FindMatches ML to build a customer 360 view

JUNE 26, 2023

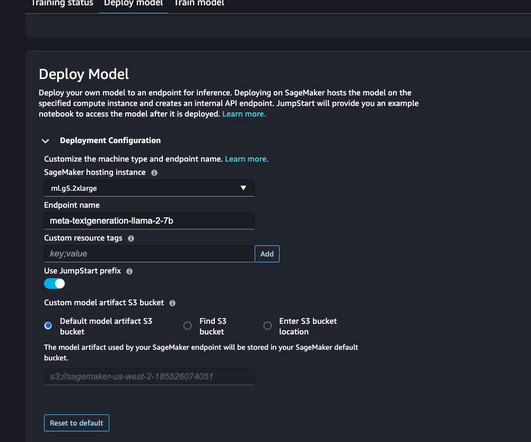

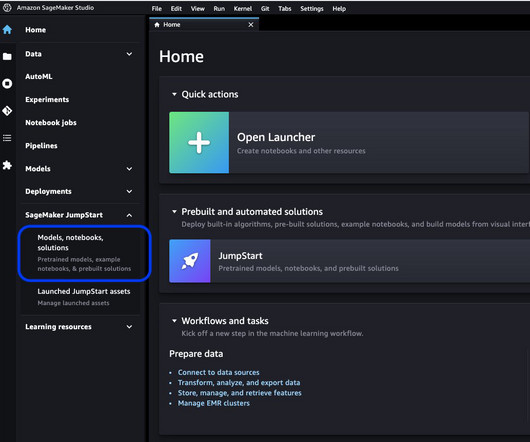

Use an AWS Glue extract, transform, and load (ETL) job to transform the raw insurance data into the CSV format that the Neptune Bulk Loader accepts. Once the data is in CSV format, use an Amazon SageMaker Jupyter notebook to run a PySpark script that loads the data into Neptune, then visualize the resulting graph in the same notebook. The first step is to start the Jupyter notebook.
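The Neptune Bulk Loader's Gremlin CSV format expects vertex files with `~id` and `~label` columns plus typed property columns (for example `name:String`), and edge files with `~id`, `~from`, `~to`, and `~label` columns. The sketch below shows what that transformation produces, using plain Python's `csv` module in place of the AWS Glue job. The raw field names (`customer_id`, `policy_id`, and so on), the `customer`/`policy`/`holds` labels, the one-vertex-file-per-label layout, and the `loader_request` helper are all hypothetical choices made for illustration, not the original post's implementation.

```python
import csv
import io

# Hypothetical raw insurance records; the field names are illustrative only.
RAW_RECORDS = [
    {"customer_id": "C1", "customer_name": "Alice", "policy_id": "P100", "policy_type": "auto", "premium": "1200"},
    {"customer_id": "C2", "customer_name": "Bob", "policy_id": "P200", "policy_type": "home", "premium": "950"},
    {"customer_id": "C1", "customer_name": "Alice", "policy_id": "P300", "policy_type": "life", "premium": "400"},
]


def write_csv(header, rows):
    """Serialize dict rows to CSV text with the given column order."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=header, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


def to_neptune_csv(records):
    """Split flat records into Gremlin-format vertex and edge CSV files.

    Vertices are deduplicated by ~id so each customer or policy is written once;
    every record becomes one 'holds' edge from a customer to a policy.
    """
    customers, policies, edges = {}, {}, []
    for rec in records:
        customers.setdefault(rec["customer_id"], {
            "~id": rec["customer_id"],
            "~label": "customer",
            "name:String": rec["customer_name"],
        })
        policies.setdefault(rec["policy_id"], {
            "~id": rec["policy_id"],
            "~label": "policy",
            "type:String": rec["policy_type"],
            "premium:Double": rec["premium"],
        })
        edges.append({
            "~id": f"{rec['customer_id']}-holds-{rec['policy_id']}",
            "~from": rec["customer_id"],
            "~to": rec["policy_id"],
            "~label": "holds",
        })
    return {
        "customers.csv": write_csv(["~id", "~label", "name:String"], customers.values()),
        "policies.csv": write_csv(["~id", "~label", "type:String", "premium:Double"], policies.values()),
        "edges.csv": write_csv(["~id", "~from", "~to", "~label"], edges),
    }


def loader_request(s3_prefix, iam_role_arn, region):
    """Hypothetical helper: build the JSON body for a Neptune loader request.

    The body would be POSTed to https://<cluster-endpoint>:8182/loader after the
    CSV files are uploaded under s3_prefix; the bucket and role are placeholders.
    """
    return {
        "source": s3_prefix,
        "format": "csv",
        "iamRoleArn": iam_role_arn,
        "region": region,
        "failOnError": "FALSE",
    }


if __name__ == "__main__":
    for name, text in to_neptune_csv(RAW_RECORDS).items():
        print(f"--- {name}\n{text}")
    print(loader_request("s3://example-bucket/neptune/", "arn:aws:iam::123456789012:role/ExampleRole", "us-east-1"))
```

Keeping each vertex label in its own file means every row fills every property column, which avoids relying on how the loader treats empty cells. All three files can sit under one S3 prefix so a single loader request picks them up.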
