LLM Inference Performance Engineering: Best Practices

databricks

OCTOBER 12, 2023

In this blog post, the MosaicML engineering team shares best practices for how to capitalize on popular open source large language models (LLMs).


Blog Related Topics

Data Science Dojo

AUGUST 28, 2023

Large Language Model Ops, also known as LLMOps, isn’t just a buzzword; it’s the cornerstone of unleashing LLM potential. As LLMs redefine AI capabilities, mastering LLMOps becomes your compass in this dynamic landscape. To gain insight into building your own LLM, refer to our resources. What is LLMOps?

Data Science Dojo

MARCH 29, 2024

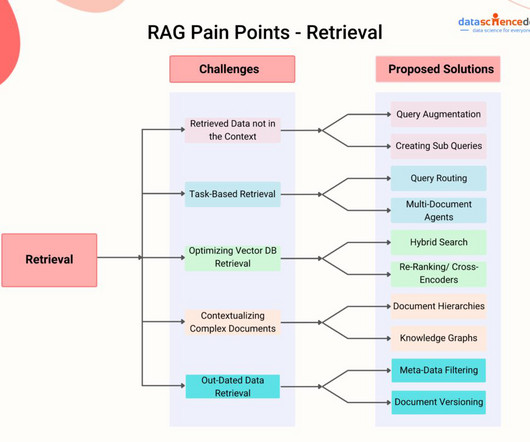

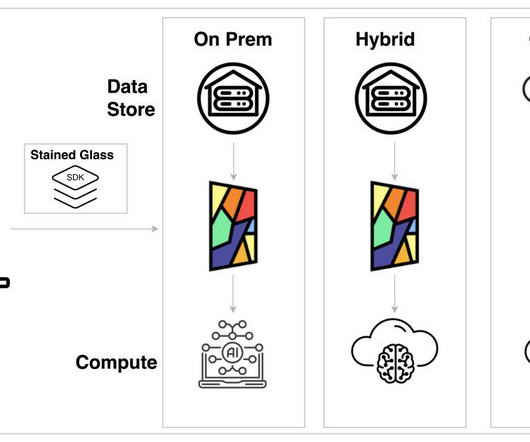

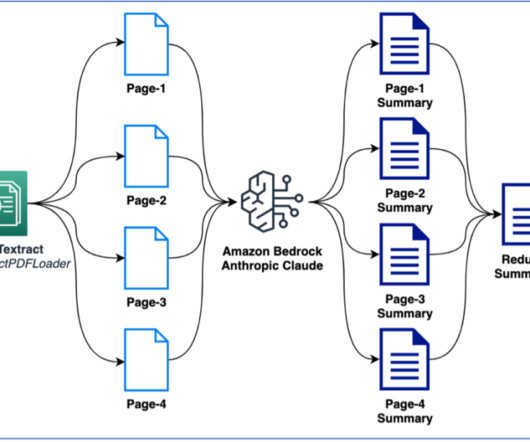

Understanding RAG: RAG is a framework that retrieves data from external sources and incorporates it into the LLM’s decision-making process. The retrieved data is synthesized with the LLM’s internal training data to generate a response. This allows the model to access real-time information and address knowledge gaps.
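The retrieve-then-generate flow described above can be illustrated with a minimal sketch. It assumes a toy bag-of-words cosine similarity in place of a real embedding model, and it stops at building the prompt rather than calling any LLM. The names `retrieve`, `build_prompt`, and `DOCS` are hypothetical and do not come from the excerpted post.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, documents, k=2):
    """Place the retrieved passages ahead of the question in one prompt."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (
        "Answer using the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


# Hypothetical external knowledge source (stand-in for a document store).
DOCS = [
    "The warehouse in Lyon opened in March.",
    "Support tickets are answered within two business days.",
    "The cafeteria serves lunch from noon.",
]

if __name__ == "__main__":
    print(build_prompt("When did the Lyon warehouse open?", DOCS, k=1))
```

In a real system the similarity function would typically be replaced by vector search over embeddings, and the resulting prompt would be sent to the model; the structure of "retrieve, then condition the generation on what was retrieved" stays the same.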

AWS Machine Learning Blog

MAY 9, 2024

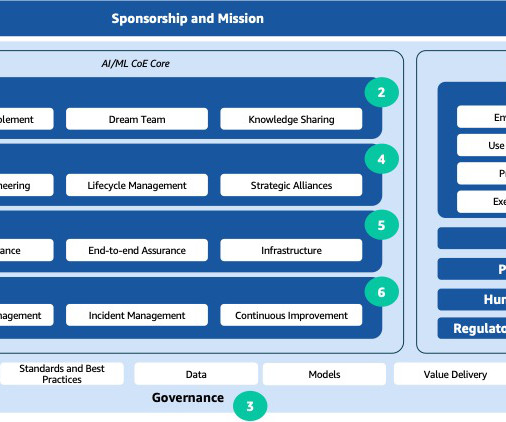

They establish and enforce best practices encompassing design, development, processes, and governance operations, thereby mitigating risks and making sure robust business, technical, and governance frameworks are consistently upheld.

AWS Machine Learning Blog

OCTOBER 6, 2023

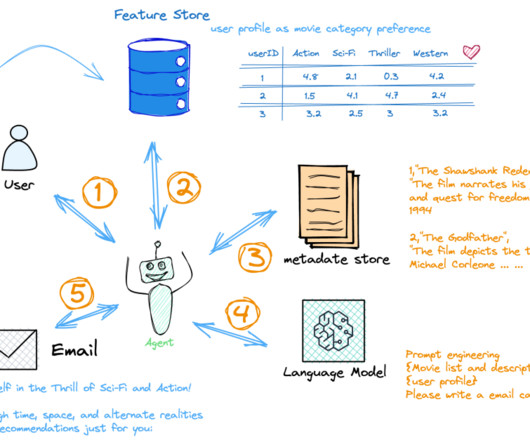

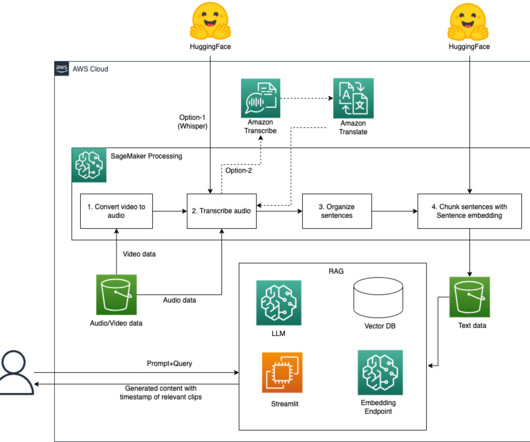

Large language models (LLMs) are revolutionizing fields like search engines, natural language processing (NLP), healthcare, robotics, and code generation. The personalization of LLM applications can be achieved by incorporating up-to-date user information, which typically involves integrating several components.

Heartbeat

JANUARY 9, 2024

The smooth deployment, continuous monitoring, and effective maintenance of LLMs within production systems are major concerns in the field of LLMOps. Solving these concerns entails creating procedures and techniques to guarantee that these potent language models perform as intended and provide accurate results in practical applications.

Hacker News

NOVEMBER 15, 2023

The paper, with coauthors from the former Facebook AI Research (now Meta AI), University College London and New York University, called RAG “a general-purpose fine-tuning recipe” because it can be used by nearly any LLM to connect with practically any external resource. Another great advantage of RAG is it’s relatively easy.

Iguazio

DECEMBER 14, 2023

Drawing from their extensive experience in the field, the authors share their strategies, methodologies, tools and best practices for designing and building a continuous, automated and scalable ML pipeline that delivers business value. Application pipeline development (intercept requests, process data, inference, and so on).

AWS Machine Learning Blog

DECEMBER 5, 2023

New and powerful large language models (LLMs) are changing businesses rapidly, improving efficiency and effectiveness for a variety of enterprise use cases. Speed is of the essence, and adoption of LLM technologies can make or break a business’s competitive advantage.

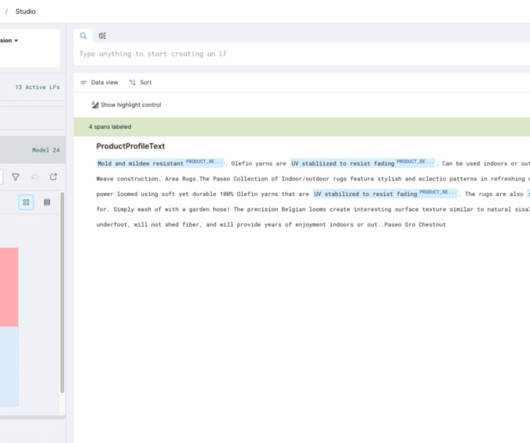

Snorkel AI

AUGUST 9, 2023

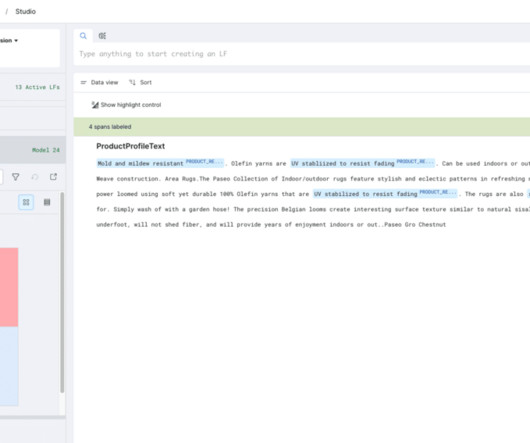

However, as enterprises begin to look beyond proof-of-concept demos and toward deploying LLM-powered applications on business-critical use cases, they’re learning that these models (often appropriately called “foundation models”) are truly foundations, rather than the entire house. is currently the state-of-the-art LLM. Handcrafted.

Iguazio

OCTOBER 24, 2023

We chose them because they provide practical information that can be implemented today in your environments and organizations, along with a forward-thinking approach that discusses ideas, considerations and opportunities for the future. A Background to LLMs and Intro to PaLM 2: A Smaller, Faster and More Capable LLM Mon.,

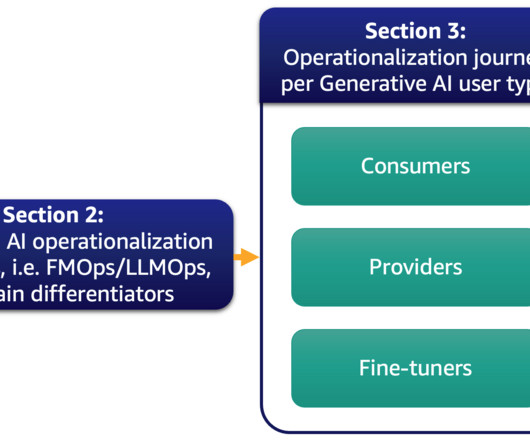

AWS Machine Learning Blog

SEPTEMBER 1, 2023

Furthermore, we deep dive on the most common generative AI use case of text-to-text applications and LLM operations (LLMOps), a subset of FMOps. Data science team – Data scientists need to focus on creating the best model based on predefined key performance indicators (KPIs) working in notebooks.

AWS Machine Learning Blog

FEBRUARY 6, 2024

In this solution, we fine-tune a variety of models on Hugging Face that were pre-trained on medical data and use the BioBERT model, which was pre-trained on the Pubmed dataset and performs the best out of those tried. We implemented the solution using the AWS Cloud Development Kit (AWS CDK). Another column for the label class.

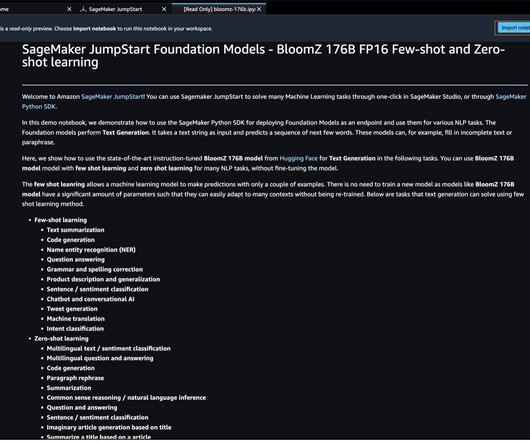

AWS Machine Learning Blog

AUGUST 14, 2023

With SageMaker JumpStart, ML practitioners can choose from a growing list of best-performing, publicly available foundation models (FMs) such as BLOOM, Llama 2, Falcon-40B, Stable Diffusion, OpenLLaMA, Flan-T5/UL2, or FMs from Cohere and LightOn. You can also access the foundation models through Amazon SageMaker Studio.

AWS Machine Learning Blog

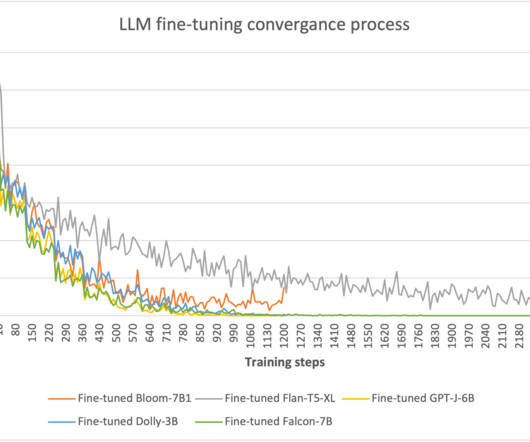

AUGUST 1, 2023

Fine-tuning is the process by which a pre-trained model is given another more domain-specific dataset in order to enhance its performance on a specific task. Jurassic-2 Grande Instruct is a large language model (LLM) by AI21 Labs, optimized for natural language instructions and applicable to various language tasks.

AWS Machine Learning Blog

JULY 24, 2023

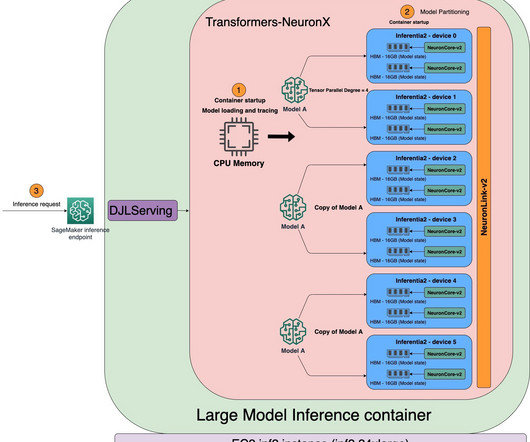

When deploying Deep Learning models at scale, it is crucial to effectively utilize the underlying hardware to maximize performance and cost benefits. Our objective is to achieve highest performance at lowest cost through maximum utilization of the hardware. This allows us to handle more inference requests with fewer accelerators.

AWS Machine Learning Blog

SEPTEMBER 21, 2023

In particular, we provide practical best practices for different customization scenarios, including training models from scratch, fine-tuning with additional data using full or parameter-efficient techniques, Retrieval Augmented Generation (RAG), and prompt engineering.

The MLOps Blog

MARCH 12, 2024

TL;DR: LLMOps involves managing the entire lifecycle of Large Language Models (LLMs), including data and prompt management, model fine-tuning and evaluation, pipeline orchestration, and LLM deployment. Retrieval Augmented Generation (RAG) enables LLMs to extract and synthesize information like an advanced search engine.

AWS Machine Learning Blog

DECEMBER 13, 2023

We use the AWS Neuron software development kit (SDK) to access the AWS Inferentia2 device and benefit from its high performance. We then use a large model inference container powered by Deep Java Library (DJLServing) as our model serving solution. In this post, we use the Large Model Inference Container for Neuron.

AWS Machine Learning Blog

MAY 7, 2024

What makes LLMs so transformative, however, is their ability to achieve state-of-the-art results on these common tasks with minimal data and simple prompting, and their ability to multitask. This post walks through examples of building information extraction use cases by combining LLMs with prompt engineering and frameworks such as LangChain.

AWS Machine Learning Blog

MARCH 13, 2024

It achieves better performance compared to other publicly available models of similar or larger scales across different domains, including question answering, commonsense reasoning, mathematics and science, and coding. You will also find a Deploy button, which takes you to a landing page where you can test inference with an example payload.

AWS Machine Learning Blog

OCTOBER 24, 2023

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) through easy-to-use APIs. You can use LLMs in one or all phases of IDP depending on the use case and desired outcome. In this architecture, LLMs are used to perform specific tasks within the IDP workflow.

AWS Machine Learning Blog

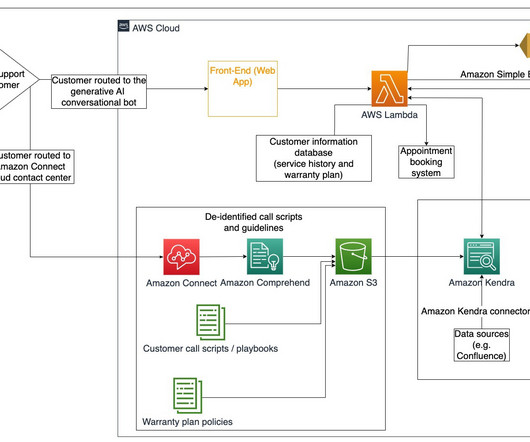

SEPTEMBER 27, 2023

The Amazon EU Design and Construction (Amazon D&C) team is the engineering team designing and constructing Amazon Warehouses across Europe and the MENA region. These requests range from simple retrieval of baseline design values, to review of value engineering proposals, to analysis of reports and compliance checks.

AWS Machine Learning Blog

SEPTEMBER 14, 2023

The following risks and limitations are associated with LLM-based queries that a RAG approach with Amazon Kendra addresses: Hallucinations and traceability – LLMs are trained on large datasets and generate responses based on probabilities. Running LLMs can require substantial computational resources, which may increase operational costs.

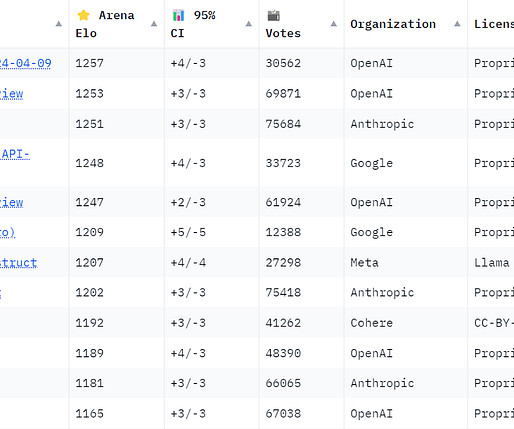

Towards AI

APRIL 30, 2024

In the popular lmsys LLM arena and leaderboard, Llama 70B scores second only to the latest GPT-4 Turbo on English text-based prompts. For the smaller 3.8B Phi-3 model from Microsoft, feedback has been mixed, with more skepticism about its real-world performance relative to benchmarks.

Hacker News

MAY 3, 2023

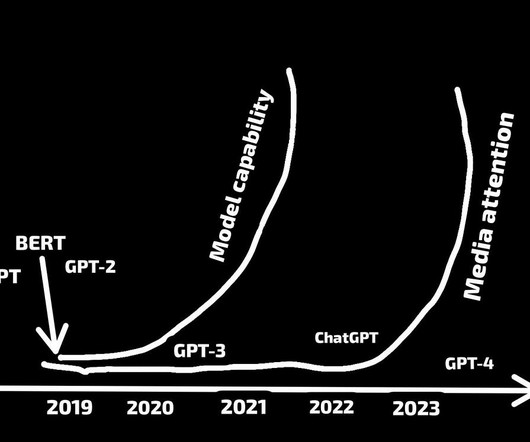

A New Era of Language Intelligence: At its essence, ChatGPT belongs to a class of AI systems called Large Language Models, which can perform an outstanding variety of cognitive tasks involving natural language. As it turns out, the effectiveness of LMs in performing various tasks is largely influenced by the size of their architectures.

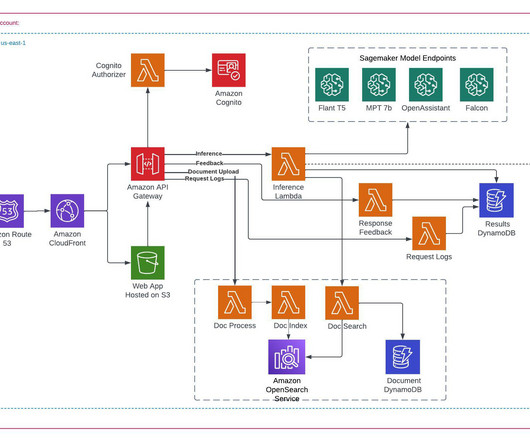

AWS Machine Learning Blog

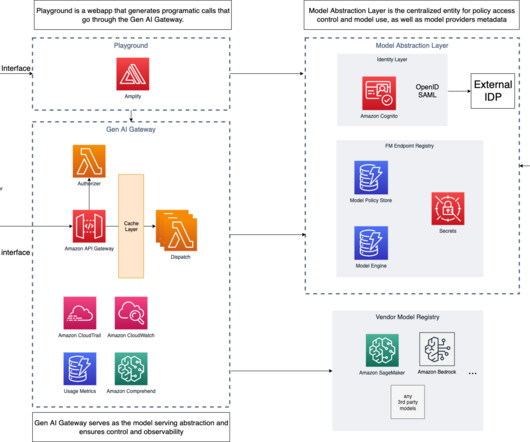

AUGUST 16, 2023

In this post, we discuss how Thomson Reuters Labs created Open Arena, Thomson Reuters’s enterprise-wide large language model (LLM) playground that was developed in collaboration with AWS. These comprehensive tools were instrumental in ensuring the fast and seamless deployment of our LLMs. Can these models handle long documents?

AWS Machine Learning Blog

MARCH 28, 2024

With the advancements being made with LLMs such as Mixtral-8x7B Instruct, a derivative of architectures such as mixture of experts (MoE), customers are continuously looking for ways to improve the performance and accuracy of generative AI applications while effectively using a wider range of closed and open source models.

AWS Machine Learning Blog

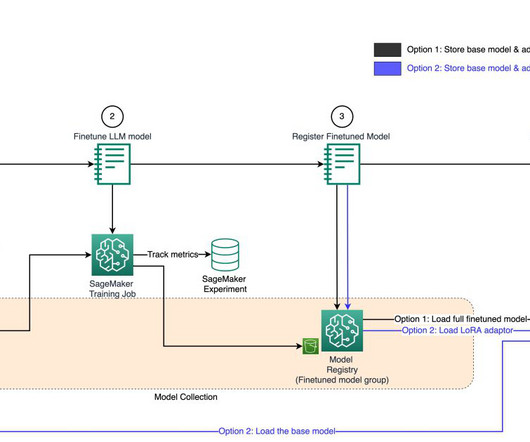

NOVEMBER 14, 2023

In this post, we walk through best practices for managing LoRA fine-tuned models on Amazon SageMaker to address this emerging question. Working with FMs on SageMaker Model Registry: In this post, we walk through an end-to-end example of fine-tuning the Llama2 large language model (LLM) using the QLoRA method.

AWS Machine Learning Blog

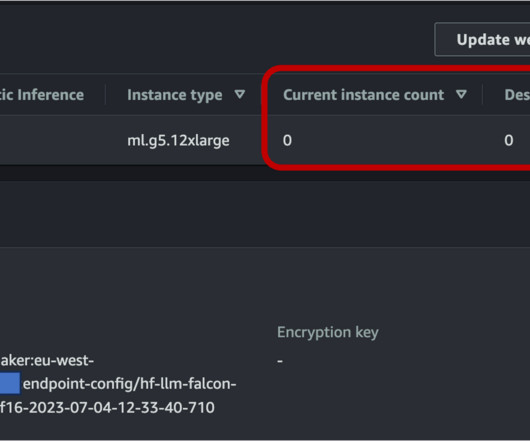

SEPTEMBER 5, 2023

as the engines that power the generative AI innovation. For example, the TII Falcon-40B Instruct model requires at least an ml.g5.12xlarge instance to be loaded into memory successfully, but performs best with bigger instances. To learn more about the different deployment options, refer to Deploy models for Inference.

AWS Machine Learning Blog

NOVEMBER 22, 2023

Hear best practices for using unstructured (video, image, PDF), semi-structured (Parquet), and table-formatted (Iceberg) data for training, fine-tuning, checkpointing, and prompt engineering. Join this session to learn which FM is best suited for your use case. Reserve your seat now! or “Because you watched.”

AWS Machine Learning Blog

DECEMBER 20, 2023

Llama Guard provides input and output safeguards in large language model (LLM) deployment. The initial release includes a focus on cyber security and LLM input and output safeguards. Llama Guard model: Llama Guard is a new model from Meta that provides input and output guardrails for LLM deployments.

AWS Machine Learning Blog

OCTOBER 11, 2023

Despite the abundance of options for serving LLMs, this is a hard question to answer due to the size of the models, varying model architectures, performance requirements of applications, and more. The LMI container has a powerful serving stack called DJL serving that is agnostic to the underlying LLM.

AWS Machine Learning Blog

APRIL 29, 2024

In 2024, however, organizations are using large language models (LLMs), which require relatively little focus on NLP, shifting research and development from modeling to the infrastructure needed to support LLM workflows. This often means the method of using a third-party LLM API won’t do for security, control, and scale reasons.

The MLOps Blog

JUNE 27, 2023

Alignment to other tools in the organization’s tech stack: Consider how well the MLOps tool integrates with your existing tools and workflows, such as data sources, data engineering platforms, code repositories, CI/CD pipelines, monitoring systems, etc. For example, neptune.ai and Pandas or Apache Spark DataFrames.

Heartbeat

MAY 29, 2023

LLMs like GPT-3 and T5 have already shown promising results in various NLP tasks such as language translation, question-answering, and summarization. However, LLMs are complex, and training and improving them require specific skills and knowledge. LLMs rely on vast amounts of text data to learn patterns and generate coherent text.

AWS Machine Learning Blog

SEPTEMBER 28, 2023

For example: The state of the art (SOTA) in models, architectures, and best practices is constantly changing. This means companies need loose coupling between app clients (model consumers) and model inference endpoints, which makes it easy to switch among large language model (LLM), vision, or multi-modal endpoints if needed.
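One way to picture the loose coupling described above is a thin routing layer that clients call instead of a specific endpoint. The sketch below is an illustration only: `EndpointRouter` and the two stand-in endpoint functions are hypothetical, and in practice each registered callable would wrap a real inference endpoint call.

```python
from typing import Callable, Dict, Optional

# An endpoint is modeled as any callable that maps a request payload to a response.
Endpoint = Callable[[str], str]


class EndpointRouter:
    """Keeps clients unaware of which concrete endpoint serves a request."""

    def __init__(self) -> None:
        self._endpoints: Dict[str, Endpoint] = {}
        self._active: Optional[str] = None

    def register(self, name: str, endpoint: Endpoint) -> None:
        """Add an endpoint; the first one registered becomes active."""
        self._endpoints[name] = endpoint
        if self._active is None:
            self._active = name

    def use(self, name: str) -> None:
        """Switch the active endpoint without touching client code."""
        if name not in self._endpoints:
            raise KeyError(f"unknown endpoint: {name}")
        self._active = name

    def invoke(self, payload: str) -> str:
        """Send the payload to whichever endpoint is currently active."""
        if self._active is None:
            raise RuntimeError("no endpoint registered")
        return self._endpoints[self._active](payload)


# Hypothetical stand-ins for real LLM and vision endpoints.
def fake_llm_endpoint(payload: str) -> str:
    return f"llm-response({payload})"


def fake_vision_endpoint(payload: str) -> str:
    return f"vision-response({payload})"


router = EndpointRouter()
router.register("llm", fake_llm_endpoint)
router.register("vision", fake_vision_endpoint)

if __name__ == "__main__":
    print(router.invoke("hello"))
    router.use("vision")
    print(router.invoke("hello"))
```

Because the client only ever calls `router.invoke`, swapping the model behind it is a configuration change rather than a client code change.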

AWS Machine Learning Blog

APRIL 16, 2024

They then use SQL to explore, analyze, visualize, and integrate data from various sources before using it in their ML training and inference. This new feature enables you to perform various functions. For security best practices, it’s recommended to use Secrets Manager to securely store sensitive information such as passwords.

AWS Machine Learning Blog

NOVEMBER 15, 2023

Llama 2 demonstrates the potential of large language models (LLMs) through its refined abilities and precisely tuned performance. In this post, we explore best practices for prompting the Llama 2 Chat LLM. We highlight key prompt design approaches and methodologies by providing practical examples.

AWS Machine Learning Blog

AUGUST 15, 2023

Whisper is a multitasking speech recognition model that can perform multilingual speech recognition, speech translation, and language identification. To handle large audio data, we adopt transformers.pipeline to run inference with Whisper. You can refer to the following GitHub example when choosing this option.

AWS Machine Learning Blog

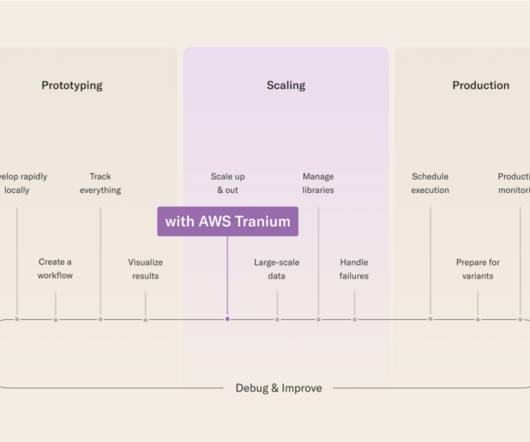

MAY 1, 2024

Llama2 by Meta is an example of an LLM offered by AWS. In this post, we explore how you can use the Neuron distributed training library to fine-tune, continuously pre-train, and reduce the cost of training LLMs such as Llama 2 with AWS Trainium instances on Amazon SageMaker.

AWS Machine Learning Blog

MAY 4, 2023

However, their increasing complexity also comes with high costs for inference and a growing need for powerful compute resources. The high cost of inference for generative AI models can be a barrier to entry for businesses and researchers with limited resources, necessitating the need for more efficient and cost-effective solutions.

Heartbeat

DECEMBER 20, 2023

This level of interaction is made possible through prompt engineering, a fundamental aspect of fine-tuning language models. By carefully choosing prompts, we can shape their behavior and enhance their performance in specific tasks. In contrast, general-purpose prompts are versatile and can be applied across various tasks and domains.
