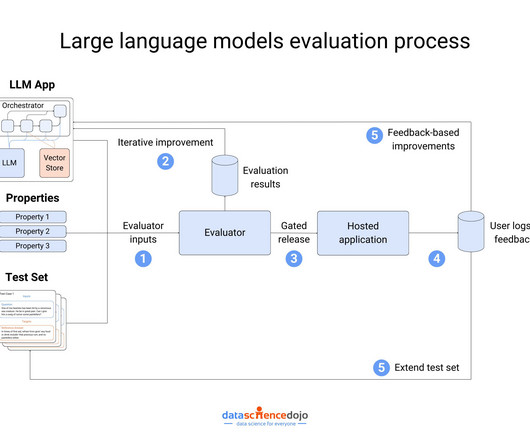

Large language models: A complete guide to understanding LLMs

Data Science Dojo

APRIL 18, 2024

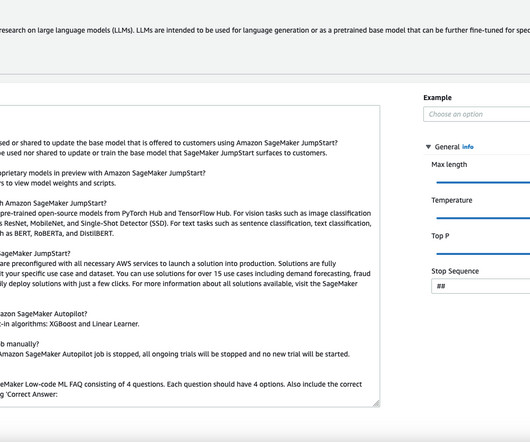

Large language models (LLMs) are AI-powered language tools trained on massive amounts of text data, such as books, articles, and even code.

Speak many languages

Because language is their area of expertise, LLMs are trained to work with multiple languages.

… billion parameter model developed by Mistral AI.
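The idea that LLMs learn from large amounts of text can be illustrated with a deliberately tiny analogy. The sketch below is not how an LLM works internally. Real LLMs are neural networks with billions of parameters. It only shows the underlying intuition of learning "which word tends to come next" from example text. The functions `train_bigram_model` and `predict_next` and the toy corpus are hypothetical names chosen for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigram_model(text: str) -> Dict[str, Counter]:
    """Toy 'training': count which word follows each word in the text."""
    words = text.lower().split()
    model: Dict[str, Counter] = defaultdict(Counter)
    # Pair every word with the word that immediately follows it.
    for current, following in zip(words, words[1:]):
        model[current][following] += 1
    return model


def predict_next(model: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())  # .get() avoids creating new keys
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus standing in for "massive amounts of text data".
corpus = "the model reads text . the model learns patterns . the model predicts words ."
model = train_bigram_model(corpus)

print(predict_next(model, "the"))      # model
print(predict_next(model, "unknown"))  # None
```

An actual LLM replaces these raw counts with learned parameters and conditions on long stretches of preceding text rather than a single word, which is what lets it generalize far beyond phrases it has seen verbatim.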
