Generative AI and multi-modal agents in AWS: The key to unlocking new value in financial markets

AWS Machine Learning Blog

SEPTEMBER 19, 2023

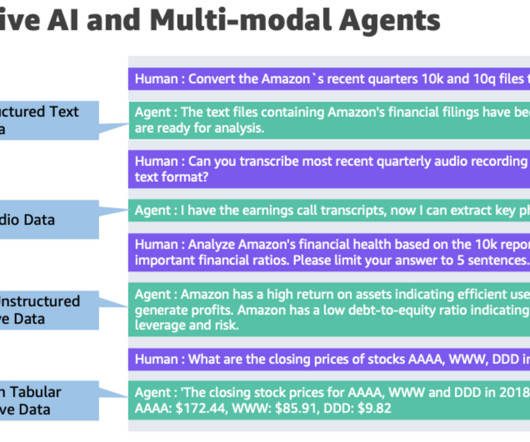

Financial organizations generate, collect, and use multi-modal data to gain insights into financial operations, make better decisions, and improve performance. One increasingly popular way to handle such data is to use multi-modal agents. Detecting fraudulent collusion that spans several data types, for instance, requires multi-modal analysis.
