How to make data lakes reliable

Dataconomy

FEBRUARY 21, 2020

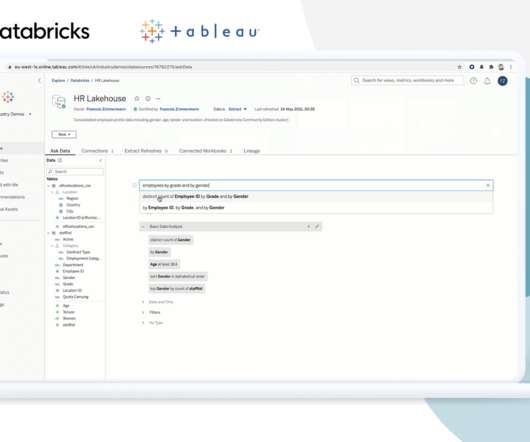

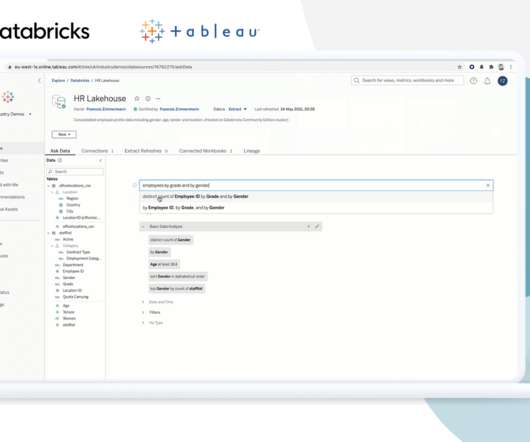

High-quality, reliable data forms the backbone of every successful data endeavor, from reporting and analytics to machine learning. Delta Lake is an open-source storage layer that addresses many common reliability problems in data lakes, most notably by adding ACID transactions on top of existing storage.
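
Delta Lake records every change to a table in a transaction log kept in the table's `_delta_log` directory. The sketch below is a toy illustration of that idea in plain Python, assuming nothing about Delta Lake's actual API or file format. The class `ToyTransactionLog`, the `_log` directory, the `CommitConflict` exception and the add/remove JSON layout are all hypothetical. Each commit claims the next version number with an exclusive file create, so two concurrent writers cannot both succeed. Readers rebuild the set of live data files by replaying commits, which also makes reading an older version ("time travel") straightforward.

```python
import json
import os
import tempfile
from typing import Iterable, Optional, Set


class CommitConflict(Exception):
    """Raised when another writer already committed the version we wanted (hypothetical)."""


class ToyTransactionLog:
    """Hypothetical, simplified commit log in the spirit of Delta Lake's _delta_log.

    Each commit is a JSON file named by a zero-padded version number and lists
    the data files it adds and removes. Data files are assumed to be written
    *before* the commit, so a writer that crashes before committing leaves
    nothing visible to readers.
    """

    def __init__(self, table_dir: str) -> None:
        self.log_dir = os.path.join(table_dir, "_log")
        os.makedirs(self.log_dir, exist_ok=True)

    def _path(self, version: int) -> str:
        return os.path.join(self.log_dir, f"{version:020d}.json")

    def latest_version(self) -> int:
        """Return the newest committed version, or -1 for an empty table."""
        versions = [int(name[:-5]) for name in os.listdir(self.log_dir)
                    if name.endswith(".json")]
        return max(versions, default=-1)

    def commit(self, read_version: int, add: Iterable[str] = (),
               remove: Iterable[str] = ()) -> int:
        """Optimistically claim version read_version + 1."""
        new_version = read_version + 1
        actions = {"add": list(add), "remove": list(remove)}
        try:
            # Mode "x" creates the file exclusively and raises FileExistsError
            # if it already exists, so only one writer can claim a version.
            # (A real system must also make the write itself atomic; this toy does not.)
            with open(self._path(new_version), "x") as f:
                json.dump(actions, f)
        except FileExistsError:
            raise CommitConflict(
                f"version {new_version} already committed") from None
        return new_version

    def snapshot(self, version: Optional[int] = None) -> Set[str]:
        """Replay commits 0..version to get the live data files at that version."""
        if version is None:
            version = self.latest_version()
        files: Set[str] = set()
        for v in range(version + 1):
            with open(self._path(v)) as f:
                actions = json.load(f)
            files.difference_update(actions["remove"])
            files.update(actions["add"])
        return files


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        log = ToyTransactionLog(d)
        v0 = log.commit(-1, add=["part-0.parquet"])
        v1 = log.commit(v0, add=["part-1.parquet"])
        v2 = log.commit(v1, add=["part-2.parquet"], remove=["part-0.parquet"])
        print(sorted(log.snapshot()))   # ['part-1.parquet', 'part-2.parquet']
        print(sorted(log.snapshot(0)))  # ['part-0.parquet']
        try:
            log.commit(v1, add=["part-x.parquet"])  # stale writer loses the race
        except CommitConflict as e:
            print(e)  # version 2 already committed
```

The exclusive create stands in for the "put if absent" guarantee a real log needs from its storage system; without it, two writers could silently overwrite each other's commits.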
