Arthur Unveils Bench: An AI Tool for Finding the Best Language Models for the Job

Analytics Vidhya

AUGUST 21, 2023

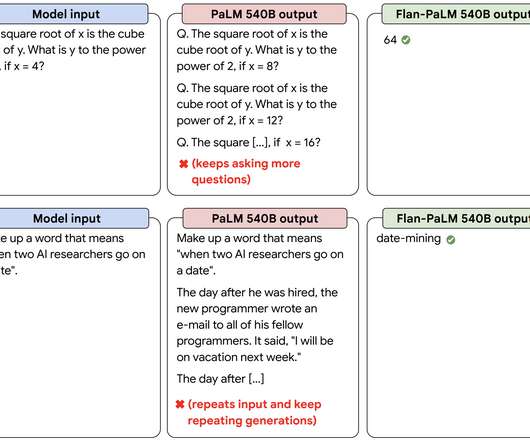

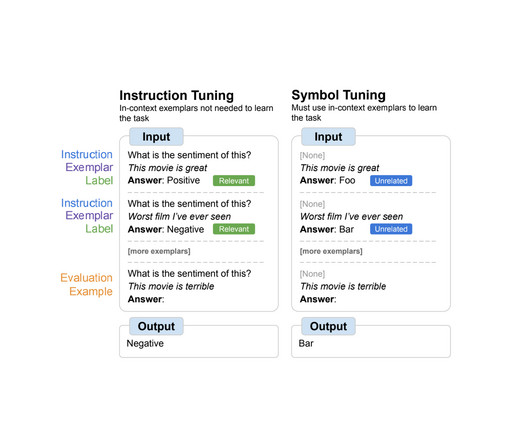

As the buzz around generative AI grows, Arthur has introduced Bench, a tool intended to help companies find the best language models for their jobs.
