Building an ETL Data Pipeline Using Azure Data Factory

Analytics Vidhya

JUNE 15, 2022

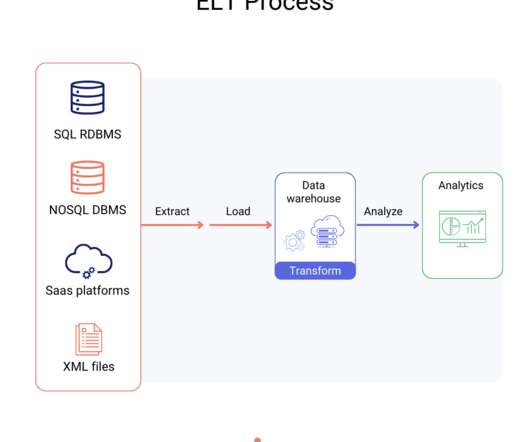

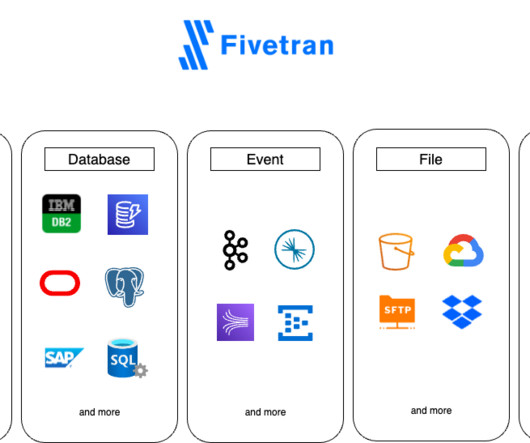

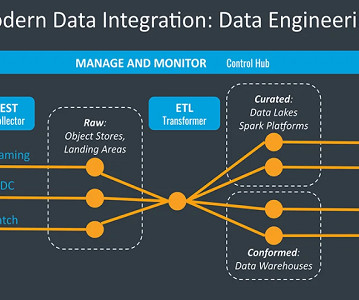

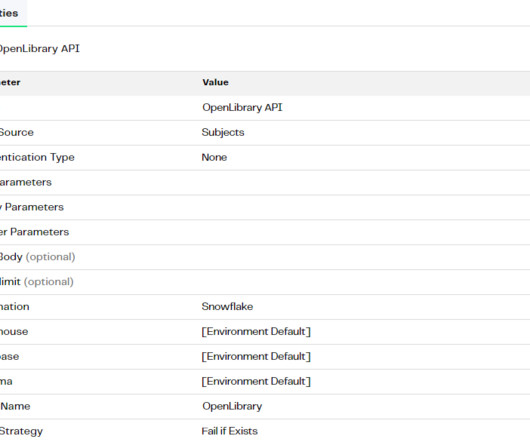

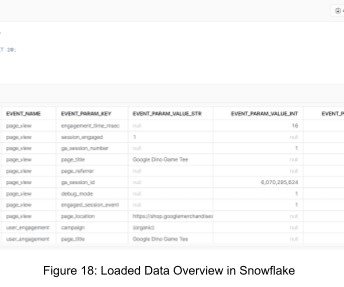

Introduction

ETL (extract, transform, load) is the process of extracting data from various sources, transforming the collected data, and loading it into a common data repository. Azure Data Factory […]. The post Building an ETL Data Pipeline Using Azure Data Factory appeared first on Analytics Vidhya.
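To make the three ETL stages described above concrete, here is a minimal sketch in plain Python. It does not use Azure Data Factory or any Azure API; the sample data, the `sales` table, and the function names `extract`, `transform`, `load`, and `run_pipeline` are all hypothetical and chosen only for illustration. An in-memory SQLite database stands in for the common data repository.

```python
import csv
import io
import json
import sqlite3

# Hypothetical sample sources, invented for illustration only.
CSV_SOURCE = "id,name,amount\n1, alice ,10.5\n2,BOB,20\n"
JSON_SOURCE = '[{"id": 3, "name": "Carol", "amount": "7.25"}]'


def extract():
    """Extract: read raw records from each source into a list of dicts."""
    rows = list(csv.DictReader(io.StringIO(CSV_SOURCE)))
    rows.extend(json.loads(JSON_SOURCE))
    return rows


def transform(rows):
    """Transform: coerce types and normalize names into one common shape."""
    return [
        (int(r["id"]), str(r["name"]).strip().title(), float(r["amount"]))
        for r in rows
    ]


def load(records, conn):
    """Load: write the cleaned records into a single shared table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales "
        "(id INTEGER PRIMARY KEY, name TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", records)
    conn.commit()


def run_pipeline():
    """Run extract, transform, and load in order; return the connection."""
    conn = sqlite3.connect(":memory:")  # stand-in for the data repository
    load(transform(extract()), conn)
    return conn


if __name__ == "__main__":
    conn = run_pipeline()
    for row in conn.execute("SELECT id, name, amount FROM sales ORDER BY id"):
        print(row)
```

Keeping each stage as a separate function mirrors how the stages are usually separated in a pipeline tool, so one stage can be swapped out (for example, a different source in `extract`) without touching the others.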
