Reduce energy consumption of your machine learning workloads by up to 90% with AWS purpose-built accelerators

JUNE 20, 2023

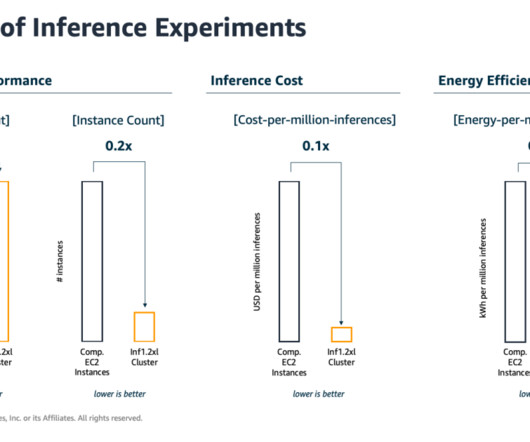

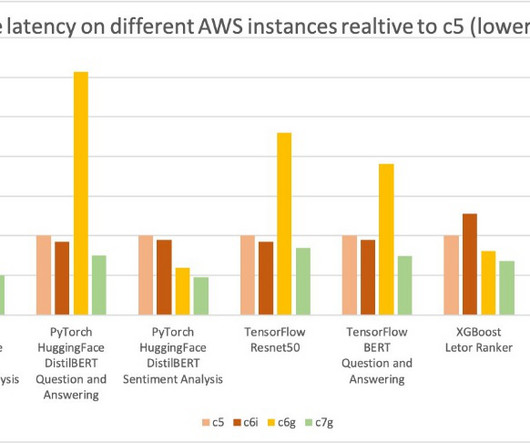

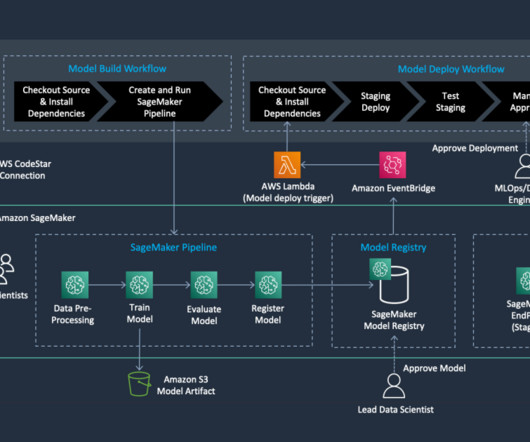

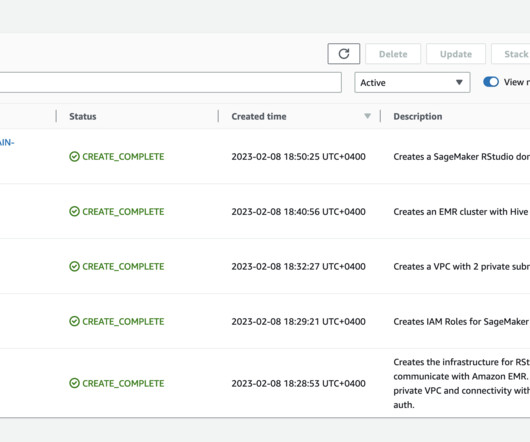

Machine learning (ML) engineers have traditionally focused on balancing the cost of model training and deployment against performance. AWS is enabling ML practitioners to lower the environmental impact of their workloads in several ways. The results are presented in the following figure.

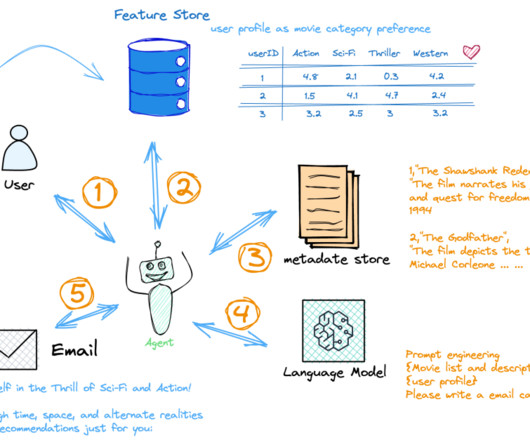
