Top 10 AI and Data Science Trends in 2022

Analytics Vidhya

FEBRUARY 3, 2022

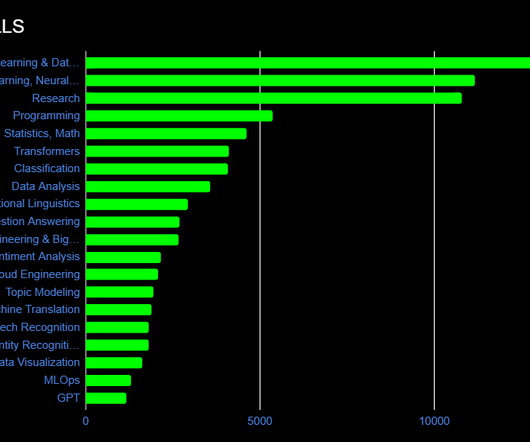

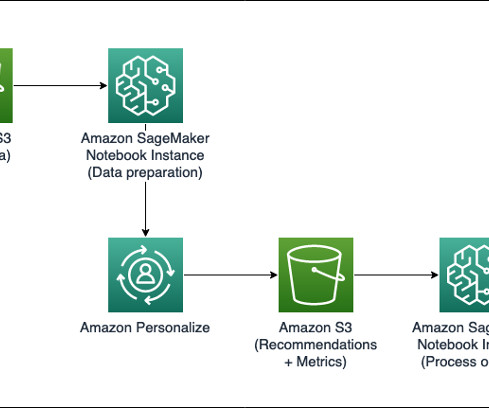

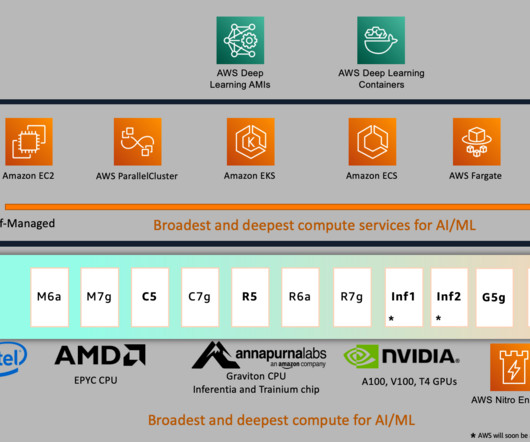

This article discusses upcoming innovations in artificial intelligence, big data, and machine learning, along with the overall data science trends for 2022. Deep learning, natural language processing, and computer vision are examples […].
