How to Launch Your First Amazon Elastic MapReduce (EMR) Cluster?

Analytics Vidhya

JANUARY 11, 2023

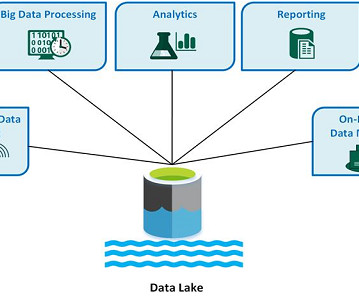

Introduction

Amazon Elastic MapReduce (EMR) is a fully managed service that makes it easy to process large amounts of data using the popular open-source framework Apache Hadoop. EMR enables you to run petabyte-scale data warehouses and analytics workloads using the Apache Spark, Presto, and Hadoop ecosystems.
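To make the idea of launching a cluster concrete, here is a minimal sketch using boto3's `run_job_flow` call. The cluster name, release label, instance type, node count, and region are all assumptions chosen for illustration and do not come from the article. The default IAM roles `EMR_DefaultRole` and `EMR_EC2_DefaultRole` are assumed to already exist in the account (for example, created with `aws emr create-default-roles`).

```python
from typing import Any, Dict


def build_cluster_request(
    name: str = "my-first-emr-cluster",   # hypothetical cluster name
    release_label: str = "emr-6.9.0",     # assumed EMR release
    instance_type: str = "m5.xlarge",     # assumed instance type
    core_nodes: int = 2,                  # assumed number of core nodes
) -> Dict[str, Any]:
    """Build the keyword arguments for the EMR run_job_flow API call."""
    return {
        "Name": name,
        "ReleaseLabel": release_label,
        # Applications to install on the cluster.
        "Applications": [{"Name": "Hadoop"}, {"Name": "Spark"}],
        "Instances": {
            "InstanceGroups": [
                {
                    "Name": "Primary",
                    "InstanceRole": "MASTER",
                    "InstanceType": instance_type,
                    "InstanceCount": 1,
                },
                {
                    "Name": "Core",
                    "InstanceRole": "CORE",
                    "InstanceType": instance_type,
                    "InstanceCount": core_nodes,
                },
            ],
            # Keep the cluster running after steps finish (it bills until terminated).
            "KeepJobFlowAliveWhenNoSteps": True,
            "TerminationProtected": False,
        },
        # Assumed to be the default roles, already created in the account.
        "JobFlowRole": "EMR_EC2_DefaultRole",
        "ServiceRole": "EMR_DefaultRole",
    }


def launch_cluster(region: str = "us-east-1") -> str:
    """Submit the request and return the new cluster ID (needs AWS credentials)."""
    import boto3  # imported here so the request builder runs without boto3 installed

    emr = boto3.client("emr", region_name=region)
    response = emr.run_job_flow(**build_cluster_request())
    return response["JobFlowId"]
```

Calling `launch_cluster()` would start real, billable resources, so it is worth inspecting the output of `build_cluster_request()` first and terminating the cluster once you are done with it.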
