AWS Redshift: Cloud Data Warehouse Service

Analytics Vidhya

APRIL 25, 2022

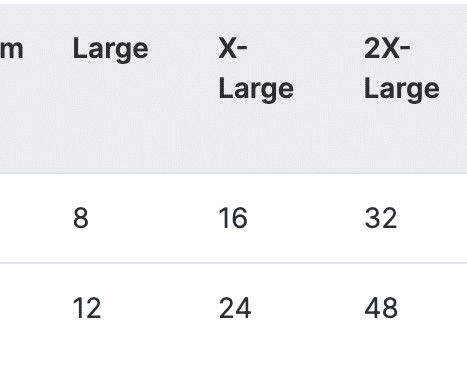

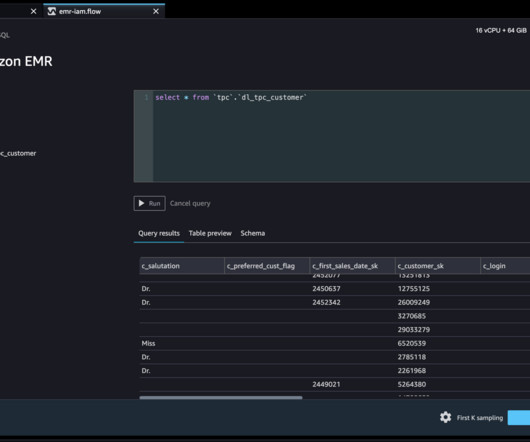

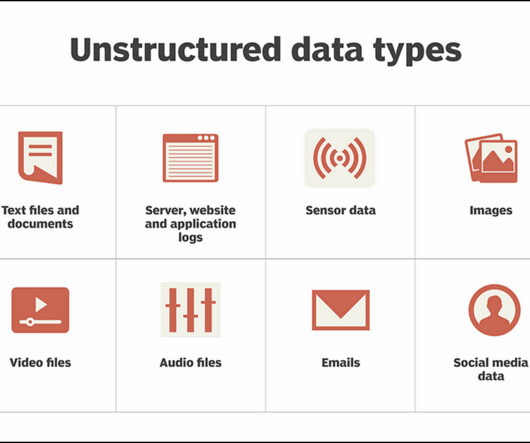

Using the platform’s storage system, companies can store petabytes of data in easy-to-access “clusters” that can be queried in parallel. Datasets range in size from a few hundred megabytes to a petabyte. […] The post AWS Redshift: Cloud Data Warehouse Service appeared first on Analytics Vidhya.
