Reduce energy consumption of your machine learning workloads by up to 90% with AWS purpose-built accelerators

JUNE 20, 2023

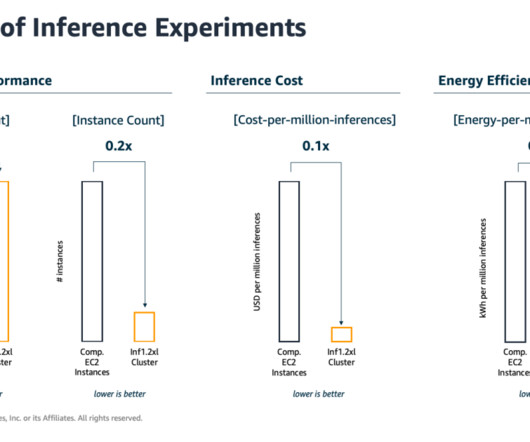

Machine learning (ML) engineers have traditionally focused on balancing the cost of model training and deployment against performance. For reference, GPT-3, an earlier-generation LLM, has 175 billion parameters and requires months of non-stop training on a cluster of thousands of accelerated processors.
