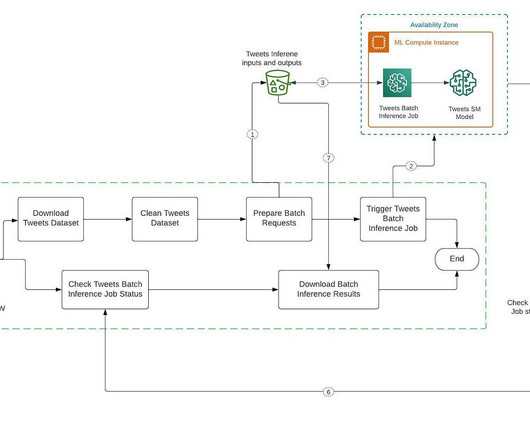

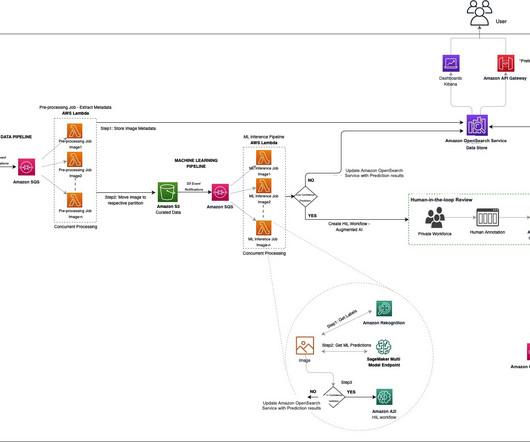

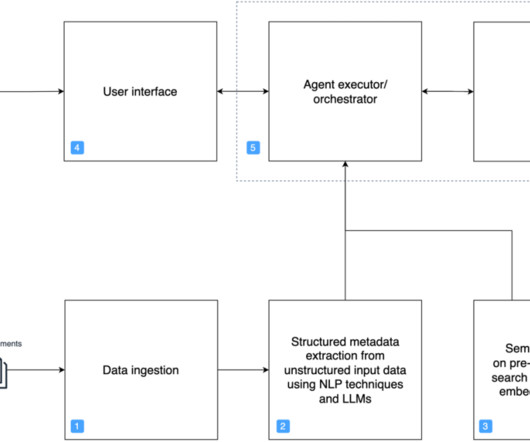

Building an End-to-End Data Pipeline on AWS: Embedded-Based Search Engine

Analytics Vidhya

MAY 26, 2023

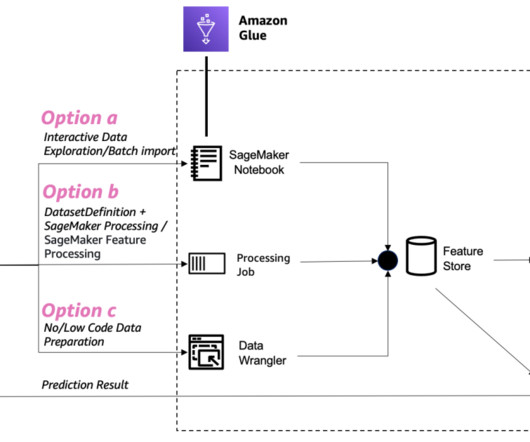

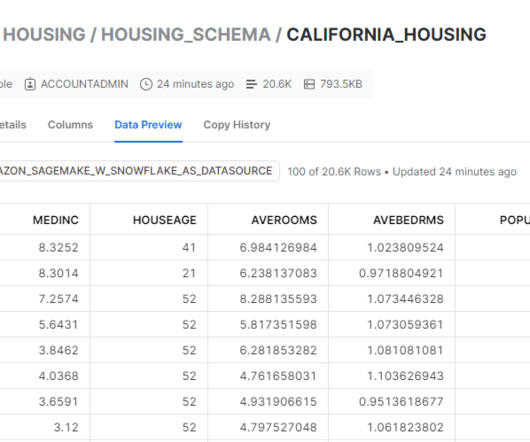

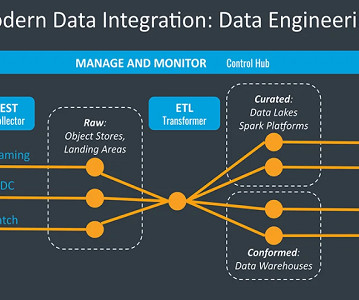

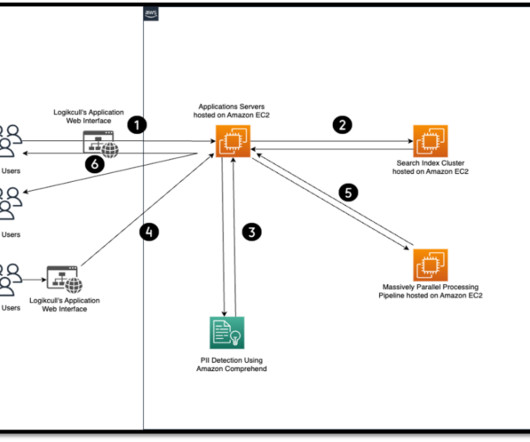

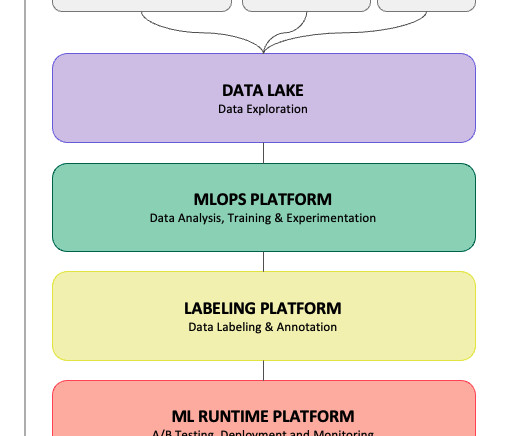

Introduction

Discover the ultimate guide to building a powerful data pipeline on AWS! In today’s data-driven world, organizations need efficient pipelines to collect, process, and leverage valuable data. With AWS, you can unleash the full potential of your data.