A Quick Guide to Fine-tuning Techniques for Large Language Models

Mlearning.ai

JULY 18, 2023

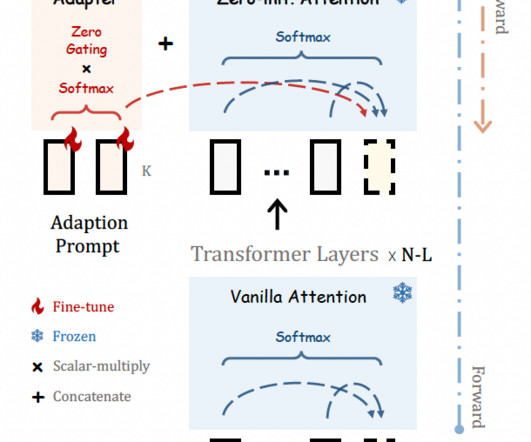

The authors also found that the PREFIX setting (context → x → y) works better than the INFIX setting (x → context → y), because a context placed first can influence both the input x and the output y. The authors set a gate g to determine how much prompting information passes to the next step. Language models are few-shot learners.
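
To make the two ideas above concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: the function names (`build_prefix`, `build_infix`, `tokens_after_context`, `gated_update`), the toy token strings, and the additive form of the gate are all assumptions chosen for illustration. It assumes left-to-right (causal) attention, where a token can only be influenced by what precedes it, and it treats the gate g as a scalar that scales the prompt's contribution before it is added to the hidden state.

```python
from typing import List


def build_prefix(context: List[str], x: List[str], y: List[str]) -> List[str]:
    """PREFIX layout: context -> x -> y."""
    return context + x + y


def build_infix(context: List[str], x: List[str], y: List[str]) -> List[str]:
    """INFIX layout: x -> context -> y."""
    return x + context + y


def tokens_after_context(sequence: List[str], context: List[str]) -> List[str]:
    """Return the tokens that follow the context block.

    Under causal attention (an assumption of this sketch), these are the
    only tokens the context can influence. Assumes the context tokens are
    unique and contiguous in the sequence.
    """
    end = sequence.index(context[0]) + len(context)
    return sequence[end:]


def gated_update(hidden: List[float], prompt_signal: List[float], g: float) -> List[float]:
    """Hypothetical gate: add the prompt signal scaled by g to the hidden state.

    g = 0 passes no prompting information; larger g passes more.
    """
    return [h + g * p for h, p in zip(hidden, prompt_signal)]


# Toy tokens, purely illustrative.
context = ["<p1>", "<p2>"]
x = ["x1", "x2"]
y = ["y1"]

prefix_seq = build_prefix(context, x, y)
infix_seq = build_infix(context, x, y)

# In the PREFIX layout the context precedes both x and y;
# in the INFIX layout it precedes only y.
prefix_reach = tokens_after_context(prefix_seq, context)
infix_reach = tokens_after_context(infix_seq, context)

# With the gate closed, the hidden state is unchanged.
closed = gated_update([1.0, 2.0], [0.5, -0.5], g=0.0)
opened = gated_update([1.0, 2.0], [0.5, -0.5], g=1.0)
```

In this toy setup `prefix_reach` contains the tokens of both x and y, while `infix_reach` contains only the tokens of y, which mirrors the explanation given for why PREFIX works better. Starting the gate at zero means the prompt initially contributes nothing, and its influence grows only as g moves away from zero.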
