No "Zero-Shot" Without Exponential Data

Hacker News

MAY 9, 2024

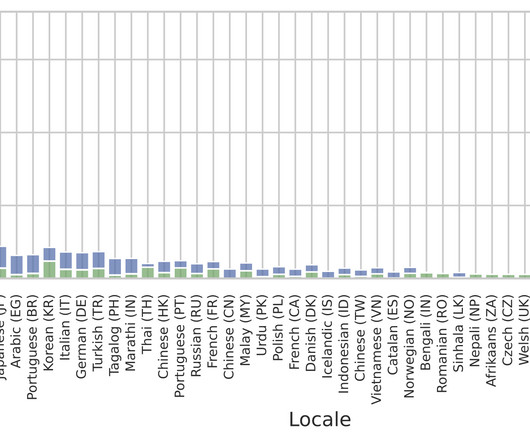

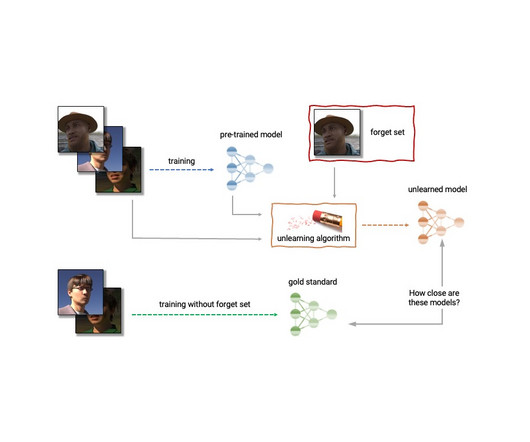

Web-crawled pretraining datasets underlie the impressive "zero-shot" evaluation performance of multimodal models such as CLIP for classification and retrieval and Stable Diffusion for image generation.
