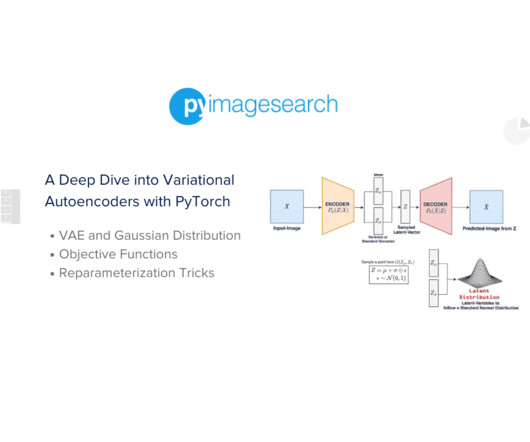

A Deep Dive into Variational Autoencoders with PyTorch

PyImageSearch

OCTOBER 2, 2023

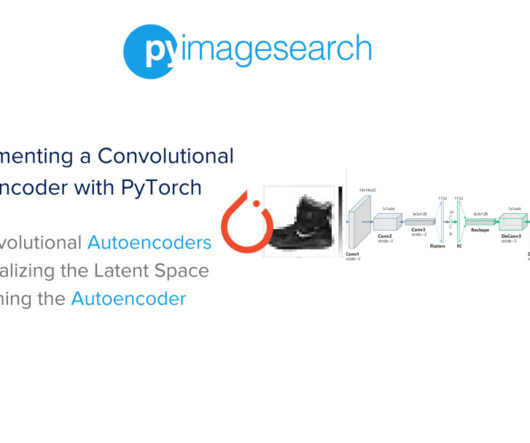

We’ll start with the foundational concepts, explore the roles of the encoder and decoder, and compare the traditional Convolutional Autoencoder (CAE) with the Variational Autoencoder (VAE). Using the Fashion-MNIST dataset, we’ll guide you through the nuances of the VAE. Let’s get started!
