A Detailed Guide of Interview Questions on Apache Kafka

Analytics Vidhya

APRIL 28, 2023

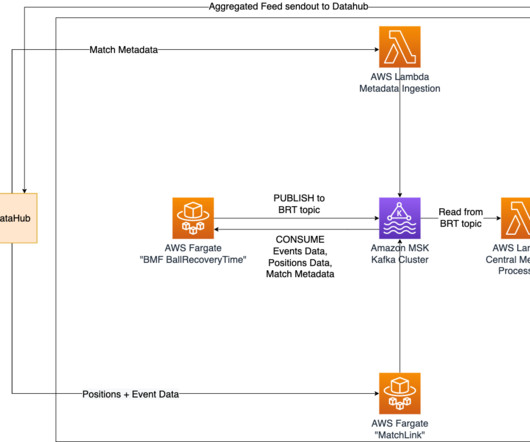

Introduction

Apache Kafka is an open-source publish-subscribe messaging system originally developed at LinkedIn and open-sourced in early 2011. Written in Scala and Java, it is a widely used data processing platform that offers low latency, high throughput, and a unified way to handle data in real time.
