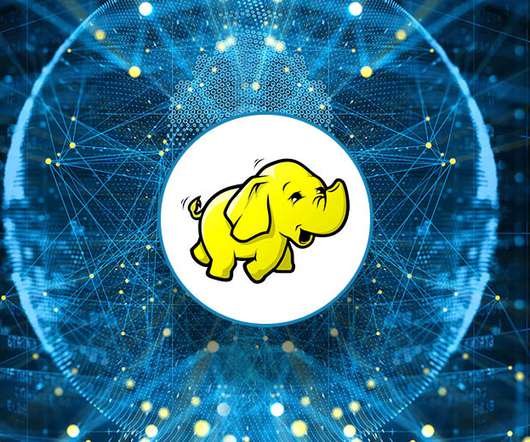

A Dive into the Basics of Big Data Storage with HDFS

Analytics Vidhya

FEBRUARY 6, 2023

Introduction

HDFS (Hadoop Distributed File System) is not a traditional database but a distributed file system designed to store big data and make it available for distributed processing. It is a core component of the Apache Hadoop ecosystem and allows large datasets to be stored and processed across multiple commodity servers.
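To make "storing large datasets across multiple commodity servers" more concrete, here is a minimal Python sketch of how a single file could be cut into fixed-size blocks, with each block copied to several machines. It is only an illustration, not HDFS code: the function name `plan_blocks`, the node names, and the round-robin placement are assumptions made for this example, and real HDFS uses a rack-aware placement policy decided by the NameNode. The 128 MiB block size and replication factor of 3 are the defaults in recent Hadoop releases, and both are configurable.

```python
import math

# Assumed defaults, matching recent Hadoop releases (both are configurable).
BLOCK_SIZE = 128 * 1024 * 1024  # dfs.blocksize: 128 MiB
REPLICATION = 3                 # dfs.replication: 3 copies per block


def plan_blocks(file_size, nodes, block_size=BLOCK_SIZE, replication=REPLICATION):
    """Toy placement plan: a list of (block_index, [node, ...]) tuples.

    Hypothetical helper for illustration only. Replicas are assigned
    round-robin, which is a simplification of HDFS's real policy.
    """
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")

    # A file is split into ceil(size / block_size) blocks; the last may be partial.
    n_blocks = math.ceil(file_size / block_size)

    placements = []
    for b in range(n_blocks):
        # Pick `replication` distinct nodes, starting at a rotating offset.
        replicas = [nodes[(b + r) % len(nodes)] for r in range(replication)]
        placements.append((b, replicas))
    return placements


if __name__ == "__main__":
    one_gib = 1024 * 1024 * 1024
    for block, replicas in plan_blocks(one_gib, ["node1", "node2", "node3", "node4"]):
        print(f"block {block}: {replicas}")
```

Under these assumptions, a 1 GiB file becomes eight blocks, and each block lives on three different machines, so losing any single server does not lose data.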
