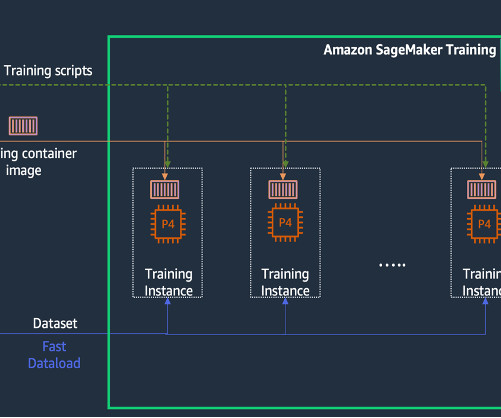

Accelerating large-scale neural network training on CPUs with ThirdAI and AWS Graviton

AWS Machine Learning Blog

FEBRUARY 29, 2024

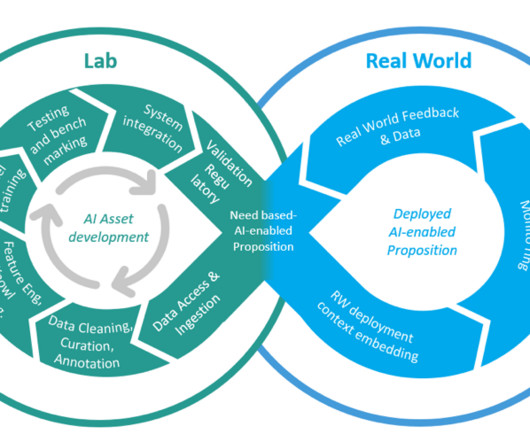

Large-scale deep learning has recently produced revolutionary advances in a vast array of fields. is a startup dedicated to the mission of democratizing artificial intelligence technologies through algorithmic and software innovations that fundamentally change the economics of deep learning.

Let's personalize your content