Activation Functions in PyTorch

Machine Learning Mastery

MAY 2, 2023

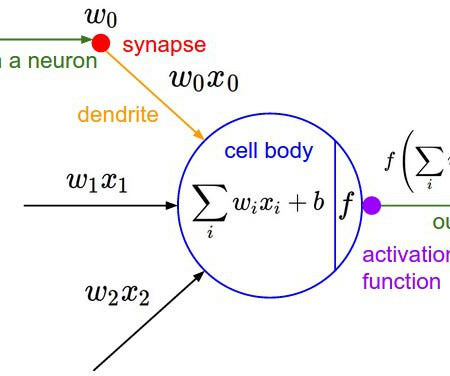

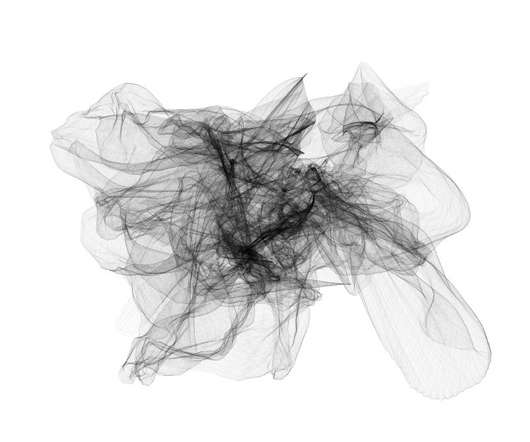

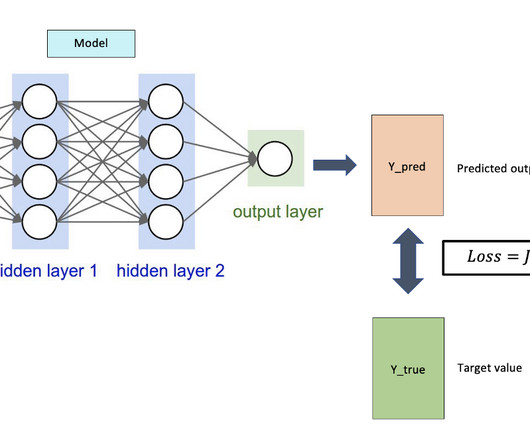

Last Updated on May 3, 2023

As neural networks become increasingly popular in the field of machine learning, it is important to understand the role that activation functions play in their implementation.
