Killswitch engineer at OpenAI: A role under debate

Dataconomy

SEPTEMBER 11, 2023

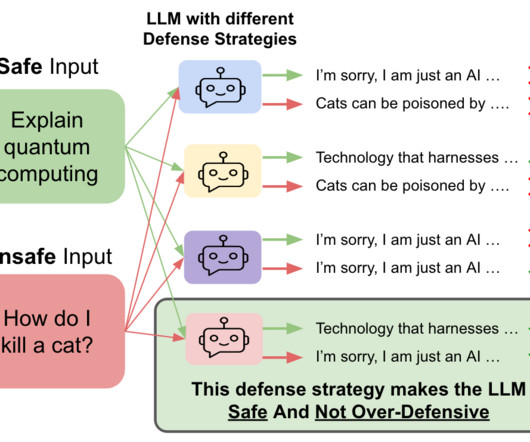

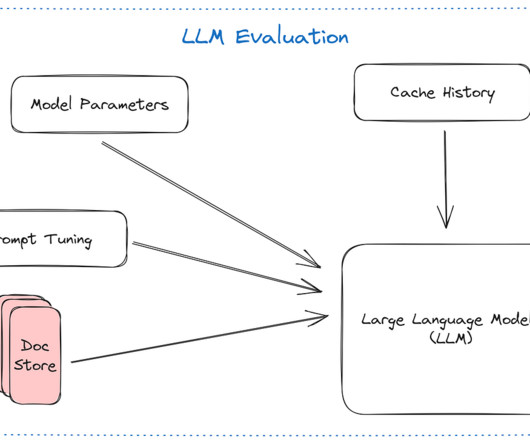

The killswitch engineer role, geared toward overseeing safety measures for OpenAI's upcoming AI model GPT-5, has sparked a firestorm of discussion across social media, with Twitter and Reddit leading the charge. OpenAI, long considered a leader in AI safety research, has identified this role as a vital safeguard.