Avery Smith’s 90-Day Blueprint: Fast-Track to Landing a Data Job

Towards AI

FEBRUARY 13, 2024

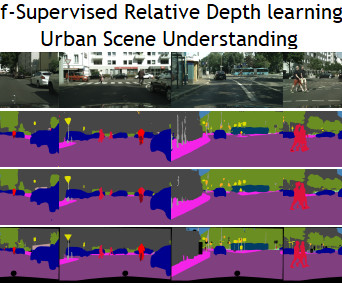

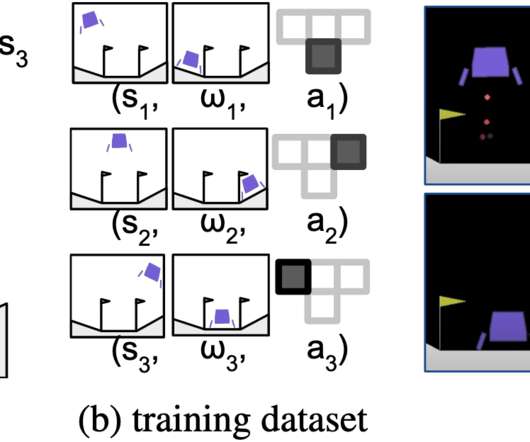

Louis-François Bouchard in What is Artificial Intelligence

Introduction to self-supervised learning · 4 min read · May 27, 2020

Author(s): Louis-François Bouchard. Originally published on Towards AI.
