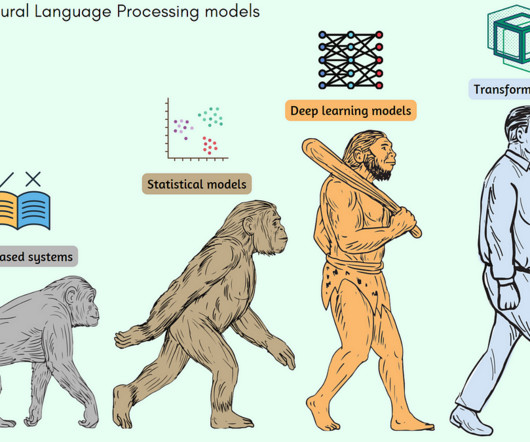

Transformer Models: The future of Natural Language Processing

Data Science Dojo

AUGUST 16, 2023

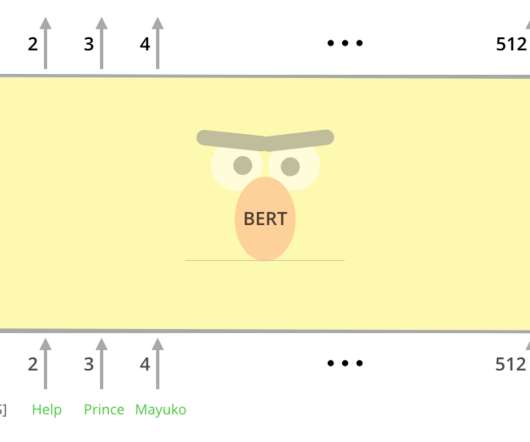

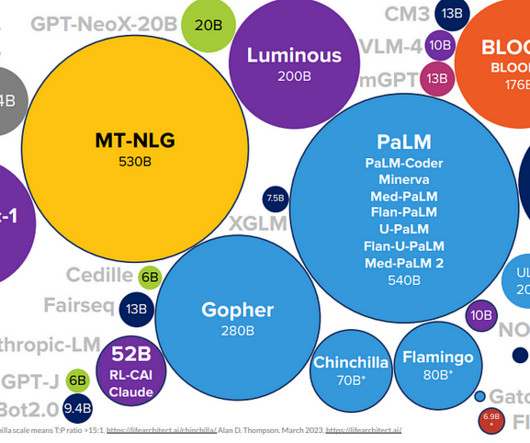

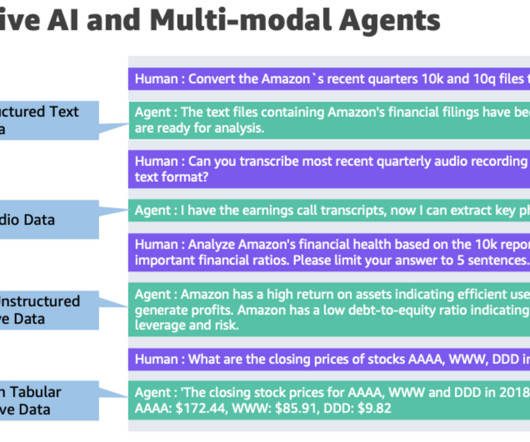

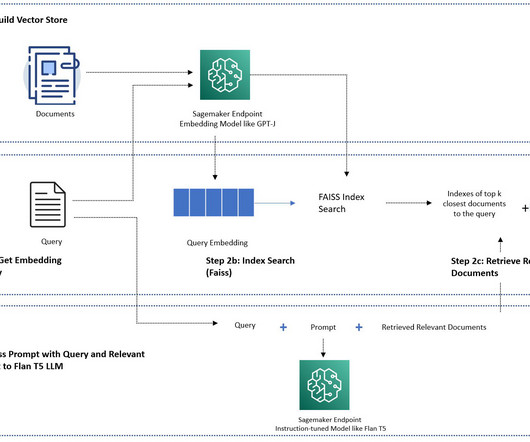

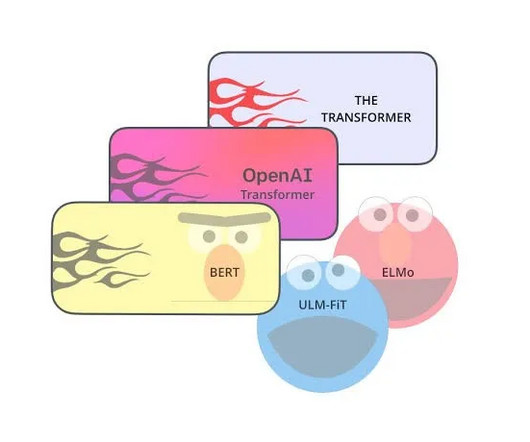

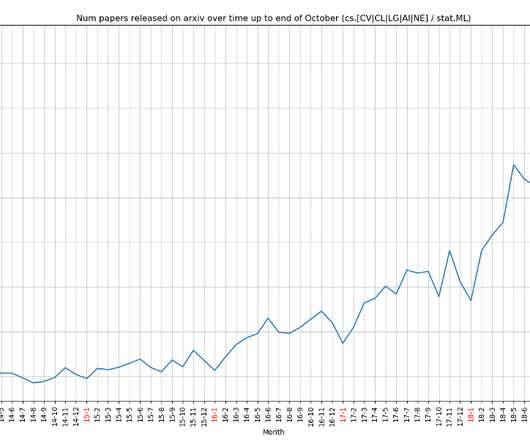

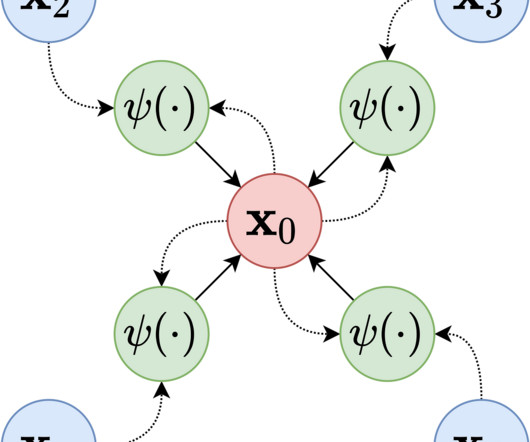

Transformer models are a type of deep learning model used for natural language processing (NLP) tasks. To learn more about NLP, see the blog post "Applications of Natural Language Processing." The transformer has been so successful because it can learn long-range dependencies between words in a sentence.
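To make the point about long-range dependencies concrete, here is a minimal sketch of scaled dot-product self-attention, the mechanism transformers use to relate every word to every other word in one step. It is an illustration under simplifying assumptions, not a full transformer layer: the function names `softmax` and `self_attention` and the toy `tokens` vectors are made up for this example, and the queries, keys, and values are the raw input vectors rather than learned projections.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention with identity projections.

    Assumption: queries, keys, and values are the input vectors themselves.
    A real transformer applies learned linear projections first.
    """
    d = len(vectors[0])
    n = len(vectors)
    all_weights = []
    outputs = []
    for q in vectors:
        # Similarity of this token to every token in the sequence.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
            for k in vectors
        ]
        w = softmax(scores)
        all_weights.append(w)
        # Output is the attention-weighted average of all token vectors.
        outputs.append(
            [sum(w[j] * vectors[j][i] for j in range(n)) for i in range(d)]
        )
    return outputs, all_weights


# Toy sequence (hypothetical embeddings): the first and last tokens point
# in the same direction, while the three tokens between them do not.
tokens = [
    [1.0, 0.0],
    [0.0, 1.0],
    [0.0, 1.0],
    [0.0, 1.0],
    [1.0, 0.0],
]

outputs, weights = self_attention(tokens)
print([round(w, 3) for w in weights[0]])
```

In this toy sequence the first token gives the last token the same weight it gives itself, and more weight than it gives its immediate neighbor. The score depends only on how similar two vectors are, not on how far apart they sit in the sequence, which is why distance does not weaken the connection.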
