Transformer Models: The Future of Natural Language Processing

Data Science Dojo

AUGUST 16, 2023

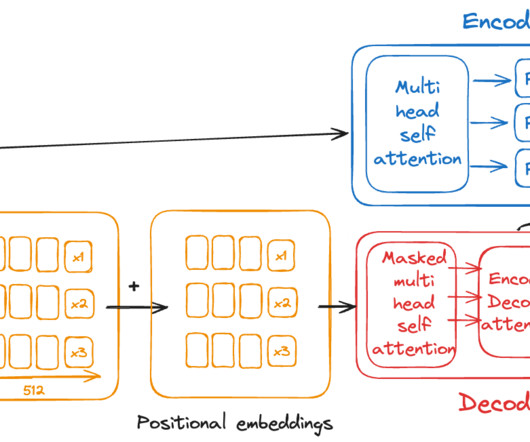

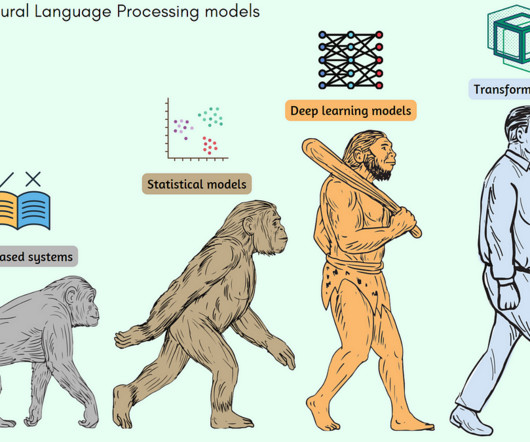

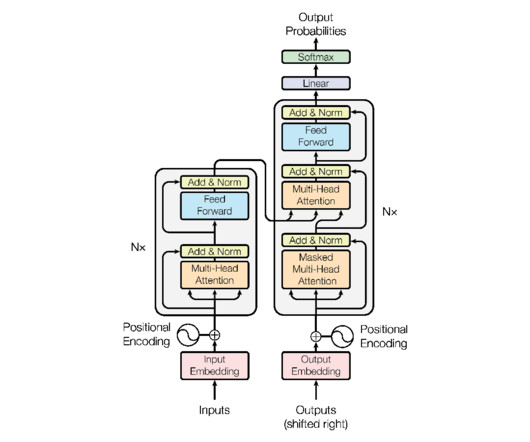

Transformer models are a type of deep learning model used for natural language processing (NLP) tasks. Vaswani et al. introduced the Transformer architecture in 2017. Transformers are used to build large language models (LLMs) such as BERT and GPT-2. Encoding is the process of converting a sequence of words into a sequence of vectors.
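To make the idea of encoding concrete, here is a minimal sketch in plain Python that turns a sentence into a sequence of vectors. It rests on simplifying assumptions: words are split on whitespace, and each word's vector comes from a table of random numbers. Production models such as BERT and GPT-2 use subword tokenizers and embedding weights learned during training. The helper names (`build_vocab`, `make_embedding_table`, `encode`) are hypothetical and chosen for illustration only.

```python
import random

def build_vocab(sentences):
    """Assign a unique integer ID to each distinct lowercase word (whitespace split, an assumption)."""
    vocab = {}
    for sentence in sentences:
        for word in sentence.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab

def make_embedding_table(vocab_size, dim, seed=0):
    """Create a vocab_size x dim table of random vectors.

    Hypothetical stand-in: a real model learns these weights during training.
    """
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(vocab_size)]

def encode(sentence, vocab, table):
    """Convert a sequence of words into a sequence of vectors.

    Each word is mapped to its integer ID, and that ID selects one row of the table.
    """
    ids = [vocab[word] for word in sentence.lower().split()]
    return [table[i] for i in ids]

corpus = ["Transformers process language", "language models use transformers"]
vocab = build_vocab(corpus)
table = make_embedding_table(len(vocab), dim=4)
vectors = encode("Transformers process language", vocab, table)
print(len(vectors), len(vectors[0]))  # 3 4
```

Each of the three words becomes one 4-dimensional vector, and the same word always maps to the same vector. In a Transformer, a sequence like this is the input the rest of the model operates on.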
