Train self-supervised vision transformers on overhead imagery with Amazon SageMaker

AWS Machine Learning Blog

AUGUST 16, 2023

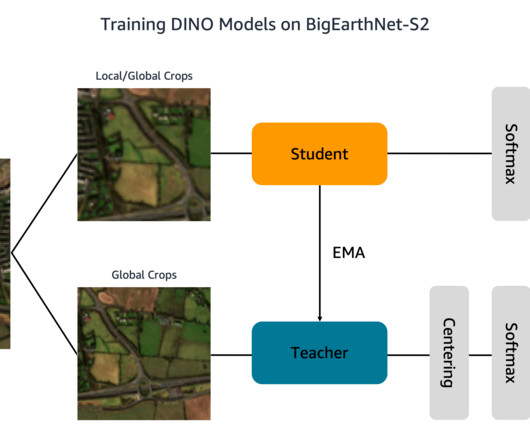

Training machine learning (ML) models to interpret overhead imagery is bottlenecked by costly and time-consuming human annotation efforts. One way to overcome this challenge is through self-supervised learning (SSL).

The following are a few example RGB images and their labels.
