The Illustrated Word2Vec (2019)

Hacker News

APRIL 18, 2024

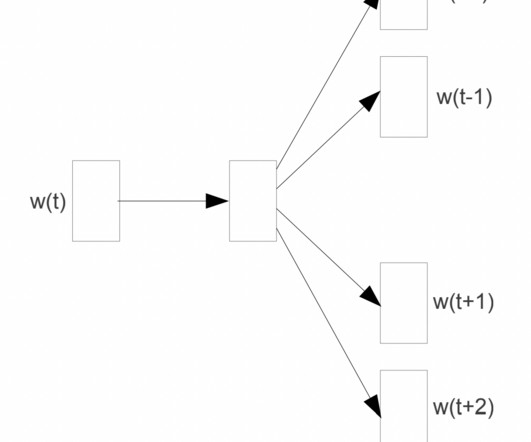

"…You can find it in the turning of the seasons, in the way sand trails along a ridge, in the branch clusters of the creosote bush or the pattern of its leaves. … Yet, it is possible to see peril in the finding of ultimate perfection."

Word2vec is a method for efficiently creating word embeddings, and it has been around since 2013.
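The following is a rough illustration of what "efficiently creating word embeddings" involves. It is a minimal pure-Python sketch of skip-gram with negative sampling (SGNS), one of the training setups word2vec uses. Each word is scored against one real neighbouring word and a few random "negative" words, rather than against the whole vocabulary. The function names (`skipgram_pairs`, `train_sgns`), the toy corpus, and all hyperparameter values are hypothetical. The sketch also simplifies word2vec in several ways:

- Negatives are drawn uniformly instead of from word2vec's smoothed unigram distribution.
- There is no frequent-word subsampling.
- The learning rate is fixed.

```python
import math
import random


def skipgram_pairs(tokens, window=2):
    """Return (center, context) pairs for words within `window` positions of each other."""
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


def sigmoid(x):
    x = max(-30.0, min(30.0, x))  # clamp to avoid overflow in math.exp
    return 1.0 / (1.0 + math.exp(-x))


def train_sgns(tokens, dim=8, window=2, negatives=3, epochs=50, lr=0.05, seed=0):
    """Hypothetical SGNS trainer; returns the sorted vocabulary and one vector per word."""
    rng = random.Random(seed)
    vocab = sorted(set(tokens))
    idx = {w: i for i, w in enumerate(vocab)}
    # Two matrices: "input" vectors for center words (kept as the embeddings)
    # and "output" vectors for context words.
    w_in = [[rng.uniform(-0.5, 0.5) / dim for _ in range(dim)] for _ in vocab]
    w_out = [[0.0] * dim for _ in vocab]
    pairs = skipgram_pairs(tokens, window)
    for _ in range(epochs):
        rng.shuffle(pairs)
        for center, context in pairs:
            c = w_in[idx[center]]
            # One true context word (label 1) plus `negatives` uniformly sampled
            # words (label 0); a sample may occasionally hit the true context word.
            targets = [(idx[context], 1.0)]
            targets += [(rng.randrange(len(vocab)), 0.0) for _ in range(negatives)]
            grad_c = [0.0] * dim
            for t, label in targets:
                o = w_out[t]
                score = sigmoid(sum(a * b for a, b in zip(c, o)))
                g = lr * (label - score)  # logistic-loss gradient step size
                for k in range(dim):
                    grad_c[k] += g * o[k]  # accumulate the update for the center vector
                    o[k] += g * c[k]       # update the context (output) vector
            for k in range(dim):
                c[k] += grad_c[k]
    return vocab, w_in


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


if __name__ == "__main__":
    corpus = "the quick brown fox jumps over the lazy dog".split()
    vocab, vectors = train_sgns(corpus)
    lookup = dict(zip(vocab, vectors))
    print(cosine(lookup["quick"], lookup["brown"]))
```

On a corpus this small the similarity scores are not meaningful. The point is the shape of the computation: each training pair touches only `1 + negatives` output vectors instead of all of them. That is where the "efficient" in the sentence above comes from.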
