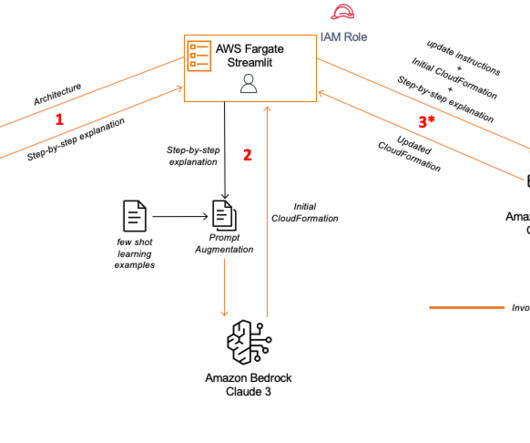

Architecture to AWS CloudFormation code using Anthropic’s Claude 3 on Amazon Bedrock

AWS Machine Learning Blog

SEPTEMBER 27, 2024

We can also gain an understanding of data presented in charts and graphs by asking questions related to business intelligence (BI) tasks, such as “What is the sales trend for 2023 for company A in the enterprise market?” AWS Fargate is the compute engine for the web application. This allows you to experiment quickly with new designs.
